# post

- [TIL: Running SQLite Queries from Python Command Line](https://kracekumar.com/post/simple-sqlite-cli-from-python/index.md): TIL: Running SQLite Queries from Python Command Line

---

title: "TIL: Running SQLite Queries from Python Command Line"

date: 2025-03-21T01:14:01Z

draft: false

tags:

- Python

- Sqlite3

- CLI

- TIL

---

Python provides a simple interface to run SQLite queries from the command line. The syntax is straightforward: `python3 -m sqlite3 database query`

Here's an example using a GitHub database with the table `repos` and a query to return five rows:

```bash

$python3 -m sqlite3 ../../Downloads/github.db "select name, html_url from repos limit 5"

('dotfiles', 'https://github.com/marksteve/dotfiles')

('djangopackages', 'https://github.com/djangopackages/djangopackages')

('honzajavorek.cz', 'https://github.com/honzajavorek/honzajavorek.cz')

('dashboard', 'https://github.com/openelections/dashboard')

('dotFiles', 'https://github.com/harish2704/dotFiles')

```

### Command Line Options

```bash

python3 -m sqlite3 --help

usage: python -m sqlite3 [-h] [-v] [filename] [sql]

Python sqlite3 CLI

positional arguments:

filename SQLite database to open (defaults to ':memory:'). A new database is created if the file does not previously exist.

sql An SQL query to execute. Any returned rows are printed to stdout.

options:

-h, --help show this help message and exit

-v, --version Print underlying SQLite library version

```

You can view the implementation in the [CPython Source Code](https://github.com/python/cpython/blob/main/Lib/sqlite3/__main__.py)

- [Notes: AI Copilot Code Quality](https://kracekumar.com/post/ai_copilot_code_quality_paper/index.md): Notes: AI Copilot Code Quality

---

title: "Notes: AI Copilot Code Quality"

date: 2025-02-15T18:56:15Z

draft: false

tags:

- paper

- AI

- LLM

---

[GitClear](https://www.gitclear.com/) published *[AI Copilot Code Quality](https://gitclear-public.s3.us-west-2.amazonaws.com/AI-Copilot-Code-Quality-2025.pdf)*

and discovered it via *[The PrimeTime](https://www.youtube.com/c/ThePrimeTime)* YouTube channel.

The paper focuses on the less discussed topic of software maintainability,

in contrast to the more frequently discussed discourse on the internet: boosting developer productivity.

**Abstract**

>The data in this report contains multiple signs of eroding code quality. This

is not to say that AI isn’t incredibly useful. But it is to say that the frequency

of copy/pasted lines in commits grew 6% faster than our 2024 prediction.

Meanwhile, the percent of commits with duplicated blocks grew even faster.

Our research suggests a path by which developers can continue to

generate distinct value from code assistants into the foreseeable future.

**Key Points:**

>The sharp

upward curve of AI adoption seemingly guaranteed that, if the identified trends were

really correlated with AI use, they would get worse in 2024. That led us to predict, in

January 2024, that the annual Google DORA Research (eventually released in

October 2024) would show “Defect rate” on the rise. Fortunately for our prediction

record, unfortunately for Dev T eam Managers, the Google data bore out the notion

that a rising defect rate correlates with AI adoption.

- The rise of AI code assistants correlates with an increase in bugs.

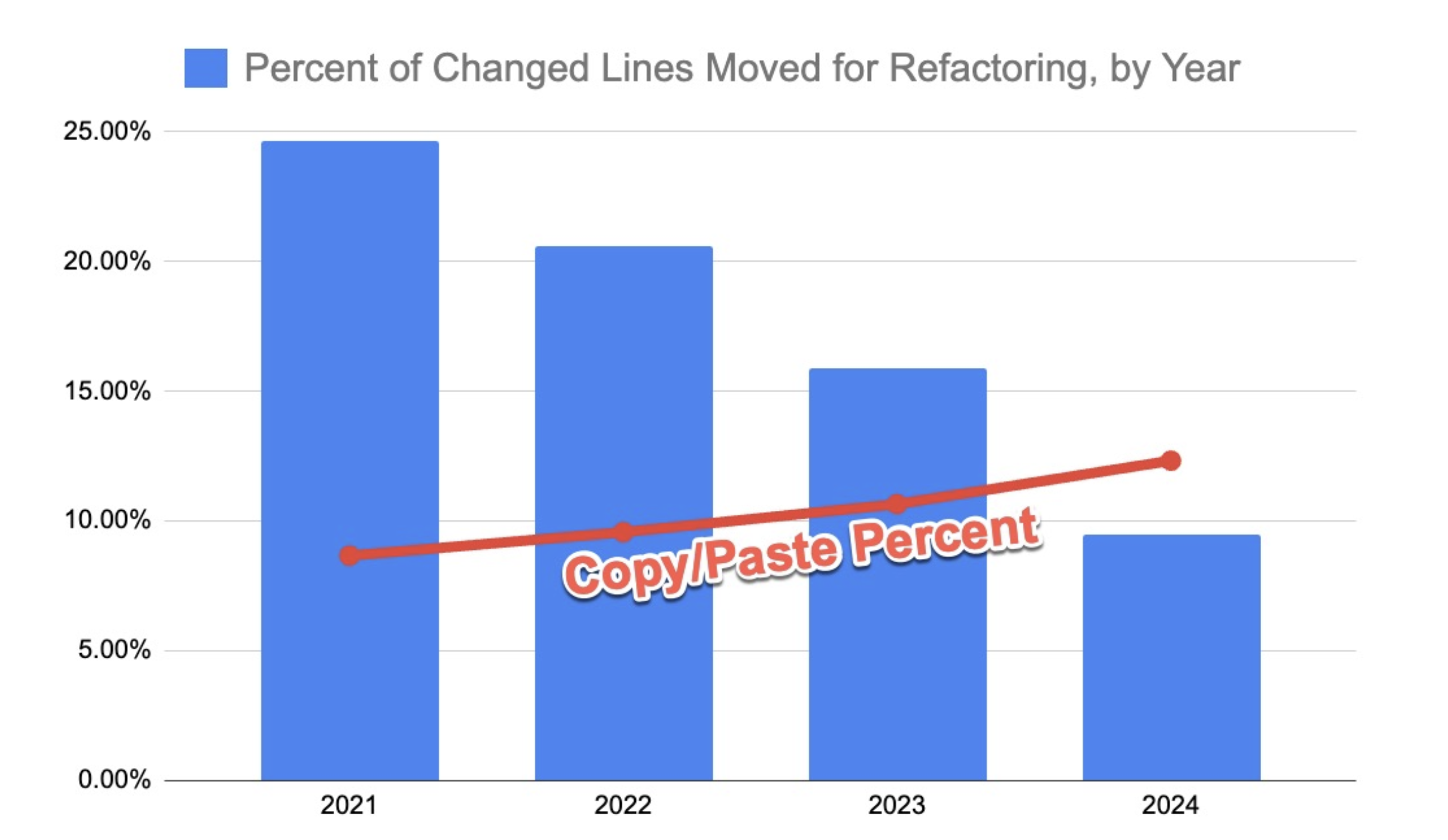

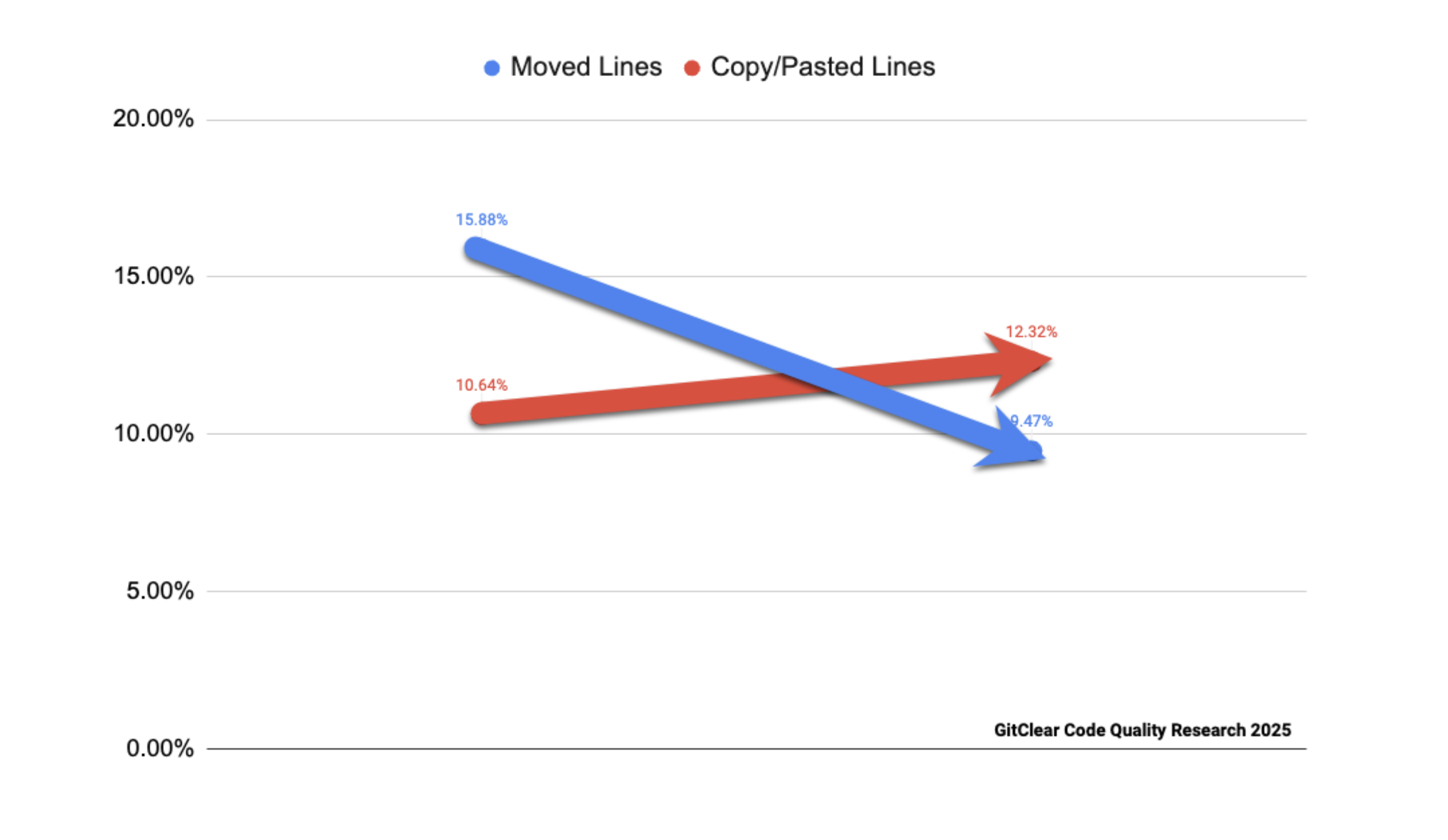

>2024 marked the first year GitClear has ever measured where the

number of “Copy/Pasted” lines exceeded the count of “Moved” lines. Moved

lines strongly suggest refactoring activity. If the current trend continues, we believe it

could soon bring about a phase change in how developer energy is spent, especially

among long-lived repos. Instead of developer energy being spent principally on

developing new features, in coming years we may find “defect remediation” as the

leading day-to-day developer responsibility.

This suggests that developers prioritize shipping code,

demonstrating impact, contributing to FOSS, and experiencing a sense of productivity.

However, they are focusing less on refactoring and creating general, reusable code.

I would like to know how maintainers feel about this trend in contributions and the

quality of pull requests. If AI can generate code quickly,

then there must also be efforts to develop tools that enhance code quality.

>Even when managers focus on more substantive productivity metrics, like “tickets

solved” or “commits without a security vulnerability,

” AI can juice these metrics by

duplicating large swaths of code in each commit. Unless managers insist on finding

metrics that approximate “long-term maintenance cost,

” the AI-generated work their

team produces will take the path of least resistance: expand the number of lines

requiring indefinite maintenance.

This perspective resonates well—at higher levels within an organization,

key metrics often revolve around increasing profits, accelerating feature deployment,

and minimizing incidents and bugs. Discussions about code quality are comparatively less common.

>The combination of these trends leaves little room to doubt that the current

implementation of AI Assistants makes us more productive at the expense of

repeating ourselves (or our teammates), often without knowing it. Instead of

refactoring and working to DRY ("Don't Repeat Yourself") code, we’re constantly

tempted to duplicate.

The process has become easier, as assistants and agents can now generate code,

edit files, and write test cases.

I experimented with Cline, a VS Code extension,

and found that a well-structured, detailed prompt can produce code remarkably quickly.

An interesting observation is that most AI benchmarks focus on solving LeetCode

problems and GitHub issues, yet no benchmark currently exists to assess code quality and maintainability.

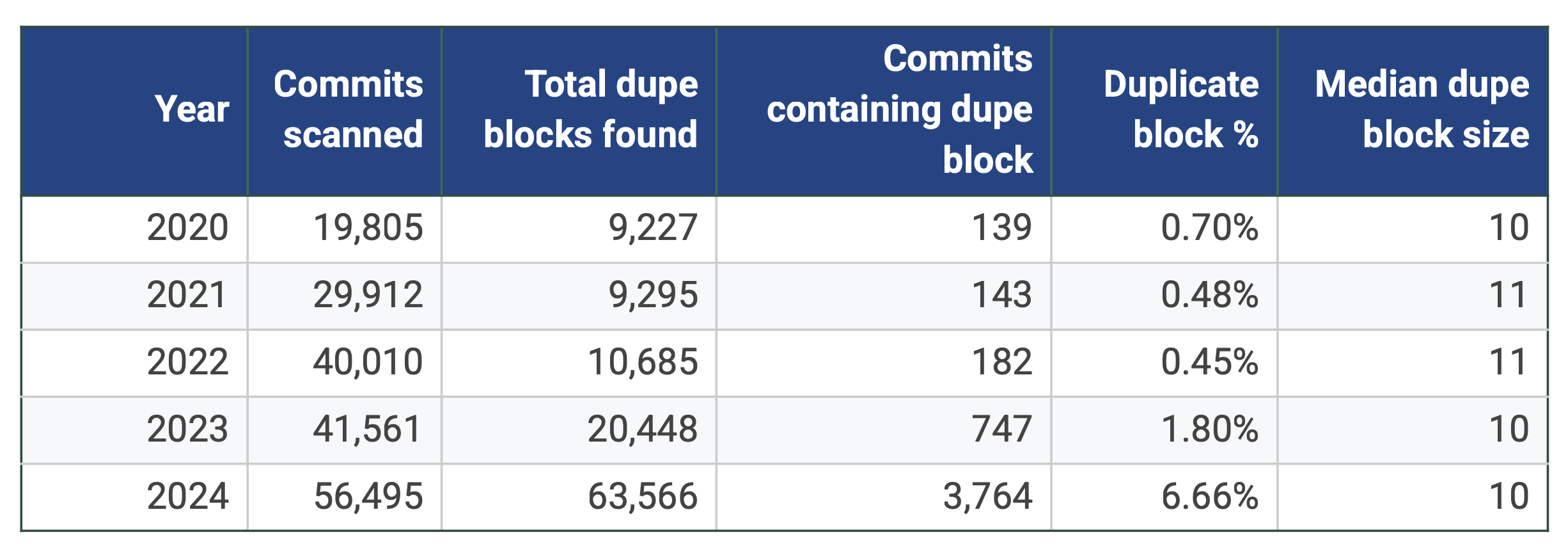

>According to our duplicate block detection method [A8], 2024 was without precedent

in the likelihood that a commit would contain a duplicated code block. The prevalence

of duplicate blocks in 2024 was observed to be approximately 10x higher than it had

been two years prior.

AI-generated suggestions are free and quick to obtain.

>Google DORA’s 2024 survey included 39,000 respondents–enough sample size to

evaluate how the reported AI benefit of “increased developer productivity” mixed with

the AI liability of “lowered code quality.

” That research has since been released, with

Google researchers commenting:

>AI adoption brings some detrimental effects. We have observed reductions to

software delivery performance, and the effect on product performance is

uncertain.

>But the 2024 ratios for “what type of code is being revised” do not paint an

encouraging picture. During the past year, only 20% of all modified lines were

changing code that was authored more than a month earlier. Whereas, in 2020, 30%

of modified lines were in service of refactoring existing code.

This trend implies that new pull requests are often created to fix issues introduced by previous pull requests.

>The trend line here is a little cagey, with 2023 faking a return toward pre-AI levels. But

if we consider 2021 as the “pre-AI” baseline, this data tells us that, during 2024, there

was a 20-25% increase in the percent of new lines that get revised within a month.

This raises an important question about finely crafted.

Are new developers actively thinking about improving their craft?

I have observed engineers with ambitions to write compilers, design new programming languages,

or even rewrite the TCP stack in Rust.

>The never-ending rollout of more powerful AI systems will continue to transform the

developer ecosystem in 2025. In an environment where change will be constant, we

would suggest that developers emphasize their still-uniquely human ability to “simplify”

and “consolidate” code they understand. There is art, skill and experience that gets

channeled into creating well-named, well-documented modules. Executives that want

to maximize their throughput in the “Age of AI” will discover new ways to incentivize

reuse. Devs proficient in this endeavor stand to reap the benefits.

That observation aligns with my previous point.

**Conclusion**

The report also provides a use case for companies to adopt GitClear.

I find it worthwhile to consider the long-term advantages and effects of AI coding assistants.

A couple of months ago, I was following Crafting Interpreters and using VS Code to write Go code.

I had to disable Copilot so that I could fully understand what was happening in the codebase and

avoid ten lines of auto-completion.

My personal take is that most of these tools primarily offer suggestions, with little

to no emphasis on leveraging existing code within the codebase to achieve coherence.

This lack of contextual awareness is one of the contributing factors.

While these tools may help rank pull request quality, detect duplication, and assess other metrics,

unless they are integrated into the development process early to reuse existing utility functions or

suggest refactoring of existing code, the problem will persist.

It is also worth considering whether to deploy LLMs fine-tuned to a specific

codebase with the goal of improving code quality by providing suggestions that

prioritize reusability and maintainability. However, the cost of maintaining and updating

LLMs remains a significant challenge.

Another consideration is to use a reasoning model to determine whether to analyze the codebase and

offer suggestions or generate new code.

- [ChatGPT Shambles for Gary Marcus Prompt](https://kracekumar.com/post/chatgpt-shambles-gary-marcus-prompt/index.md): ChatGPT Shambles for Gary Marcus Prompt

---

title: "ChatGPT Shambles for Gary Marcus Prompt"

date: 2025-02-08T22:14:34Z

draft: false

tags:

- chatgpt

- llm

- AI

---

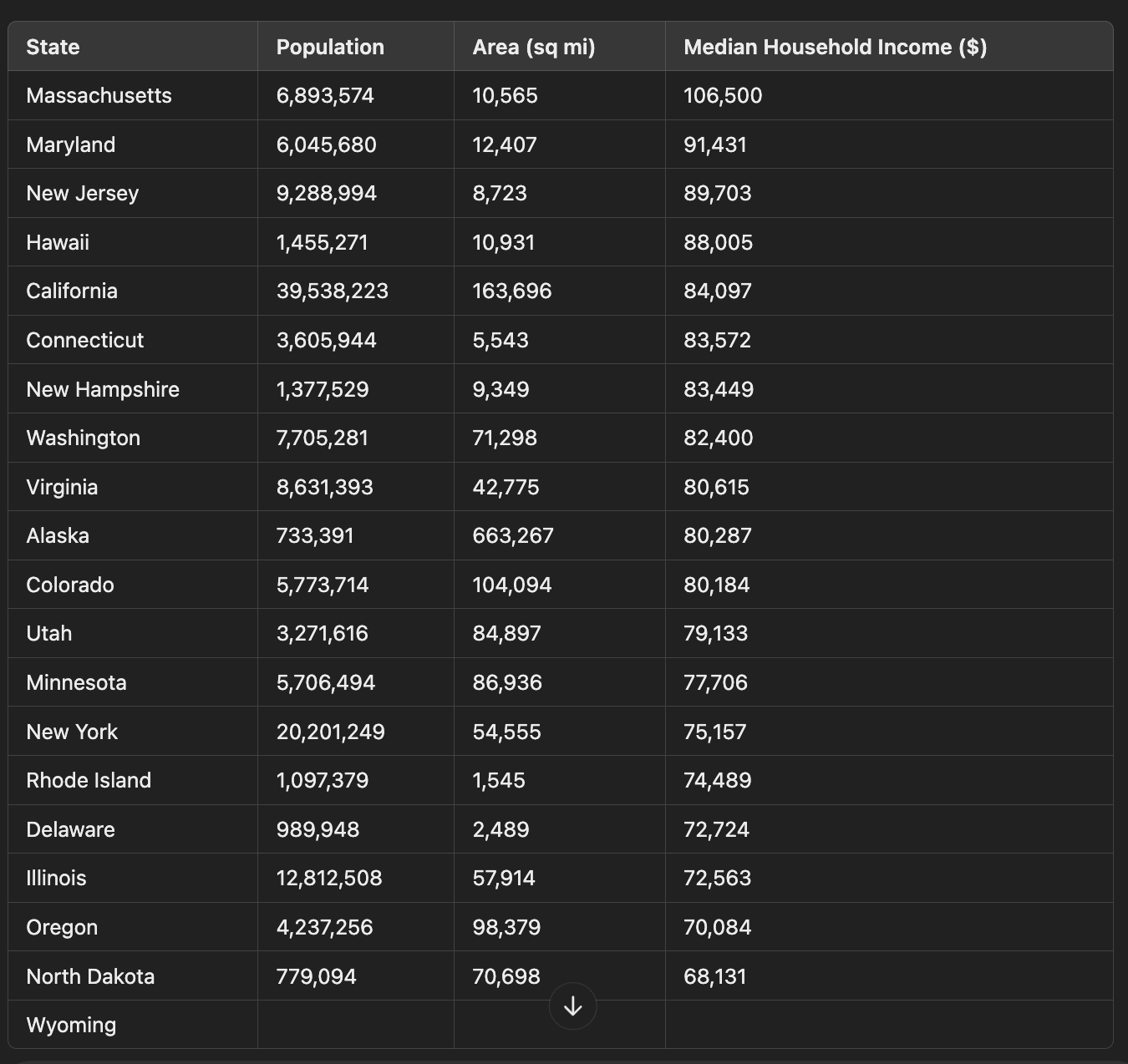

Gary Marcus recently wrote an article titled [ChatGPT in Shambles](https://garymarcus.substack.com/p/chatgpt-in-shambles).

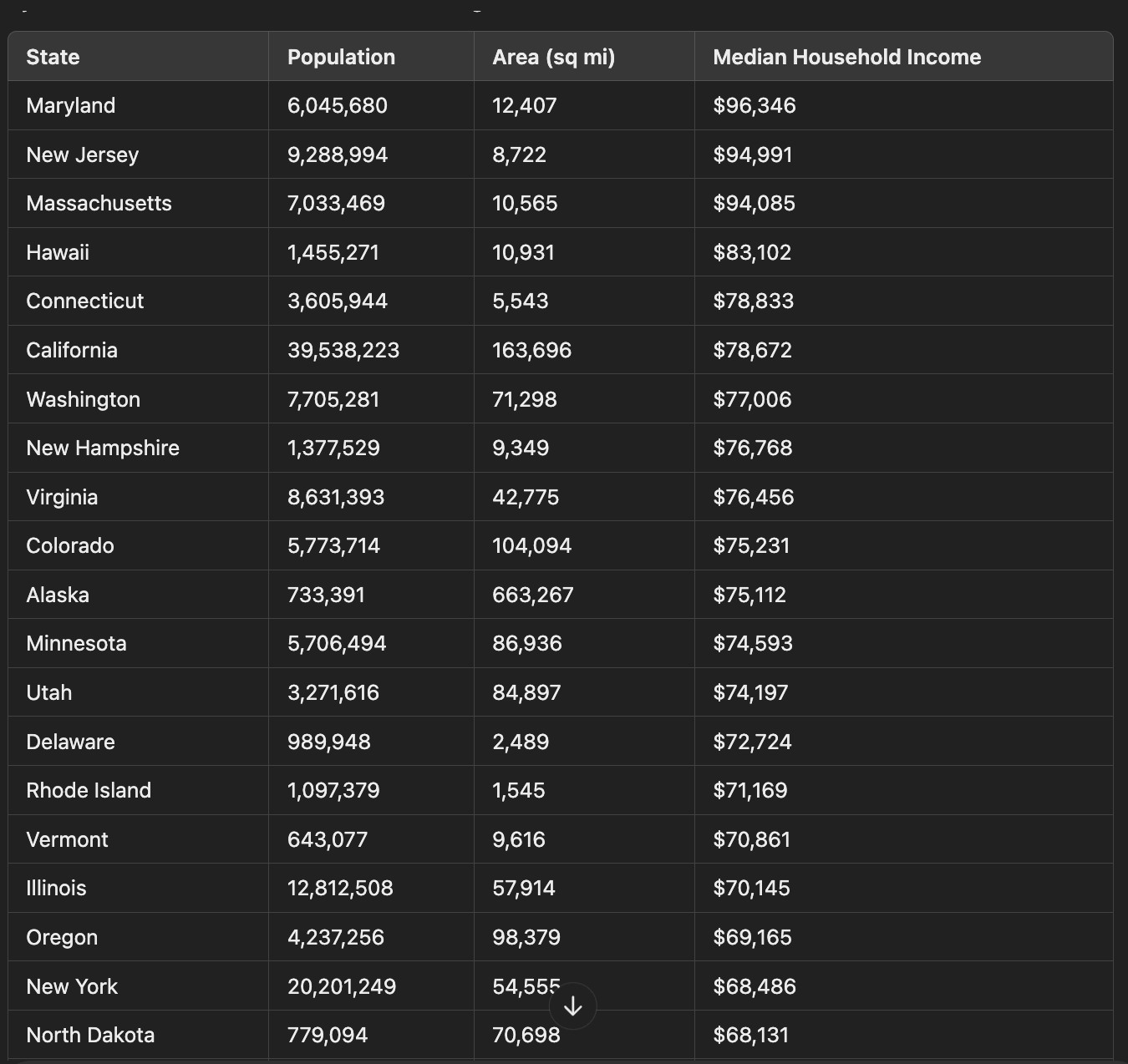

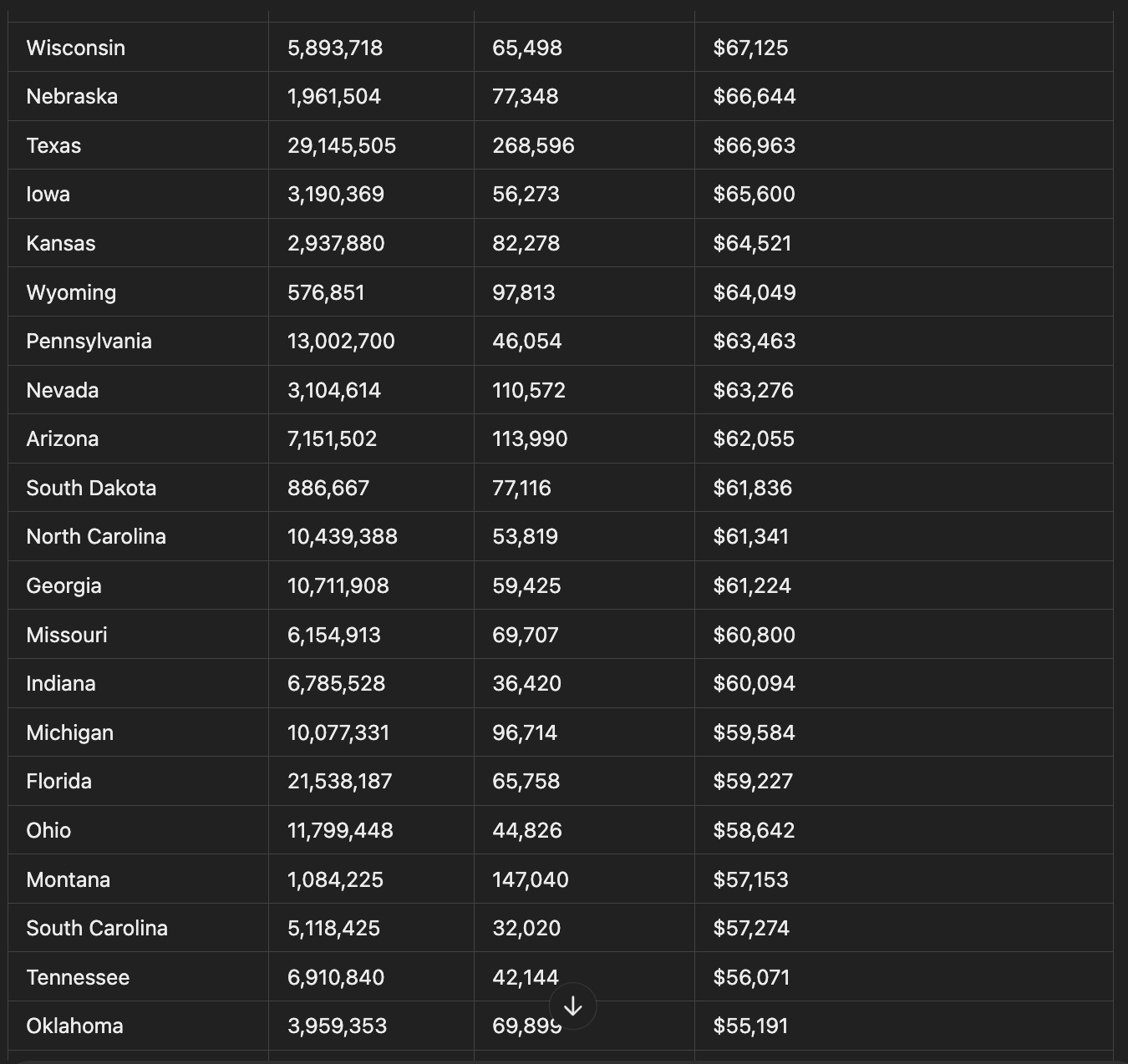

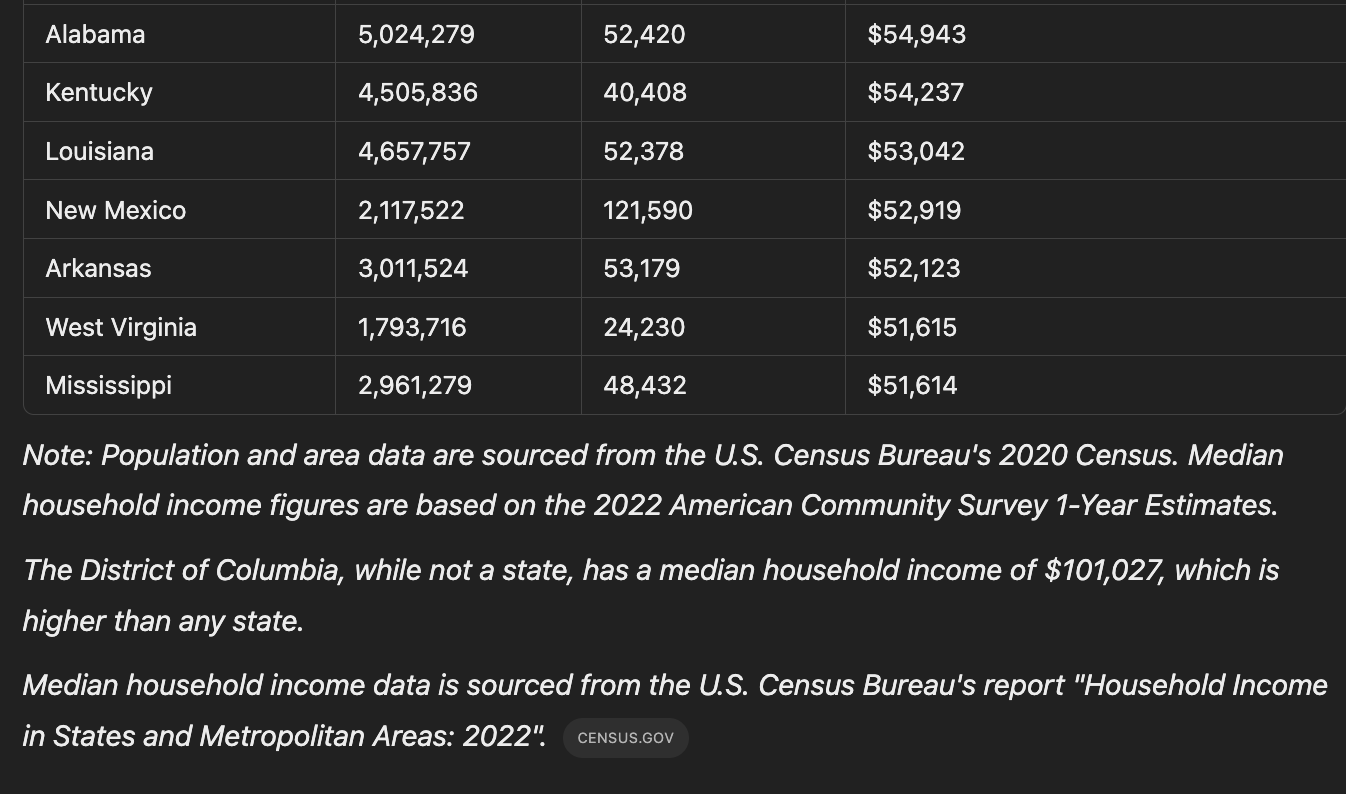

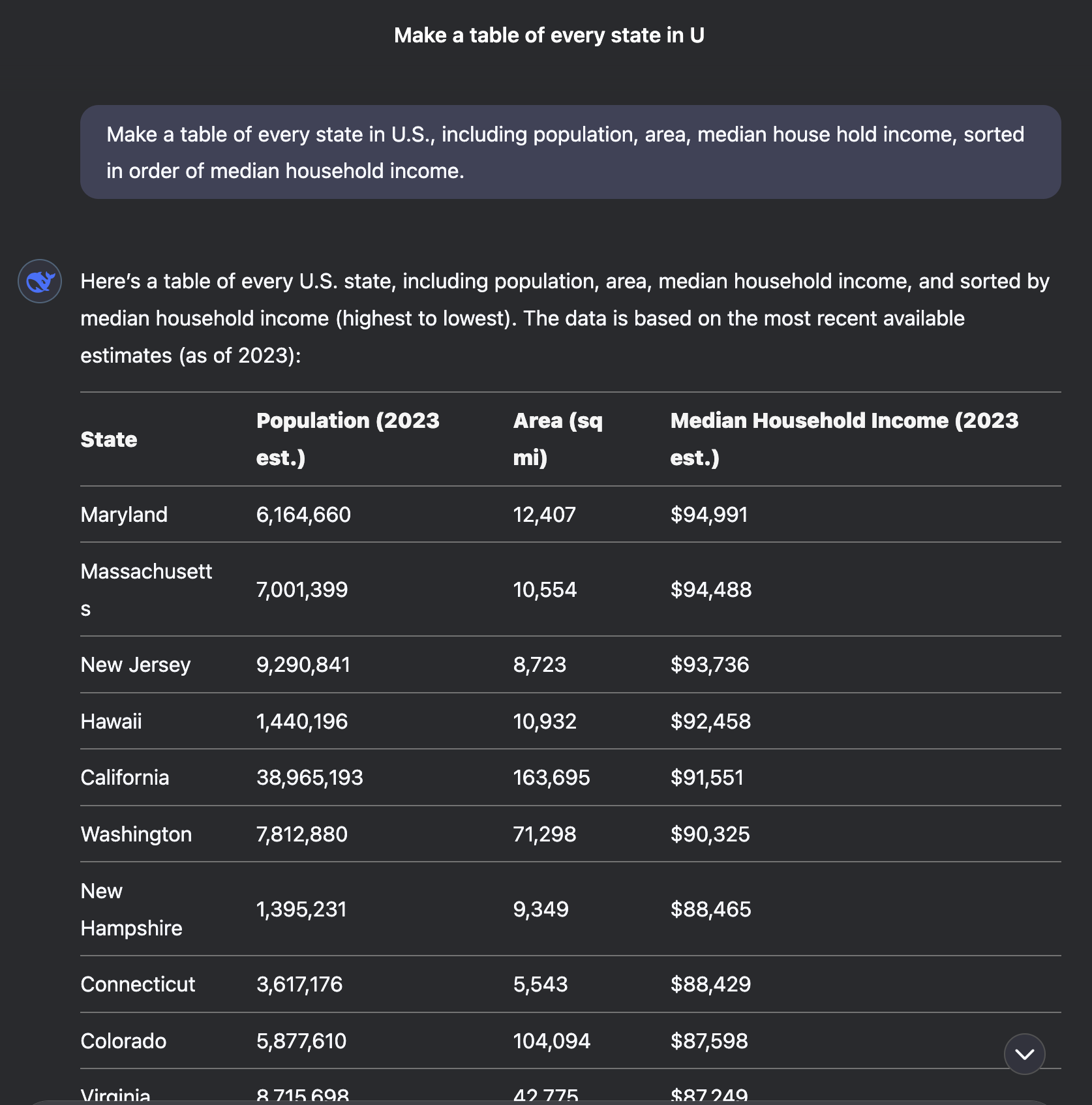

The prompt instructed chatgpt to produce a tabular table of median house hold income across [U.S. states](https://chatgpt.com/share/67a20c79-bfa4-8001-8cdf-4ee60d42df5f).

```

Make a table of every state in U.S., including population, area, median house hold income, sorted in order of median household income.

```

The output contained only twenty states and interrupted. The final row contained only name of the state.

### ChatGPT - My Attempt

The [same prompt](https://chatgpt.com/share/67a7d85c-6af0-8001-bb5e-d01d816b59f7) returned all the states and income, when I tried and logged into the ChatGPT. I skipped verifying the data quality and checked only the structure.

My guess is fine-tuned(don't think so in the short interval) or non-deterministic output based on logged in user vs anonymnous ask.

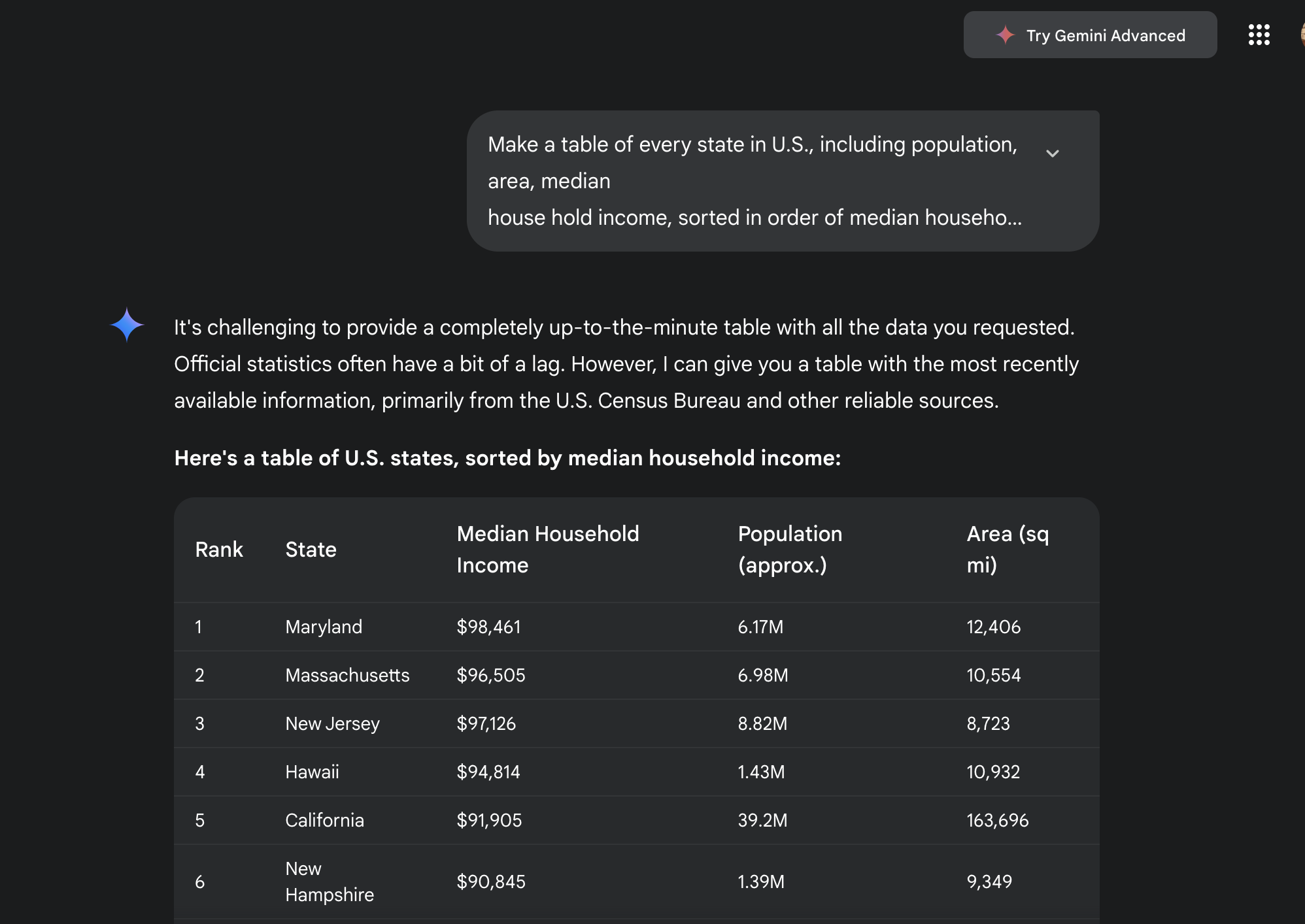

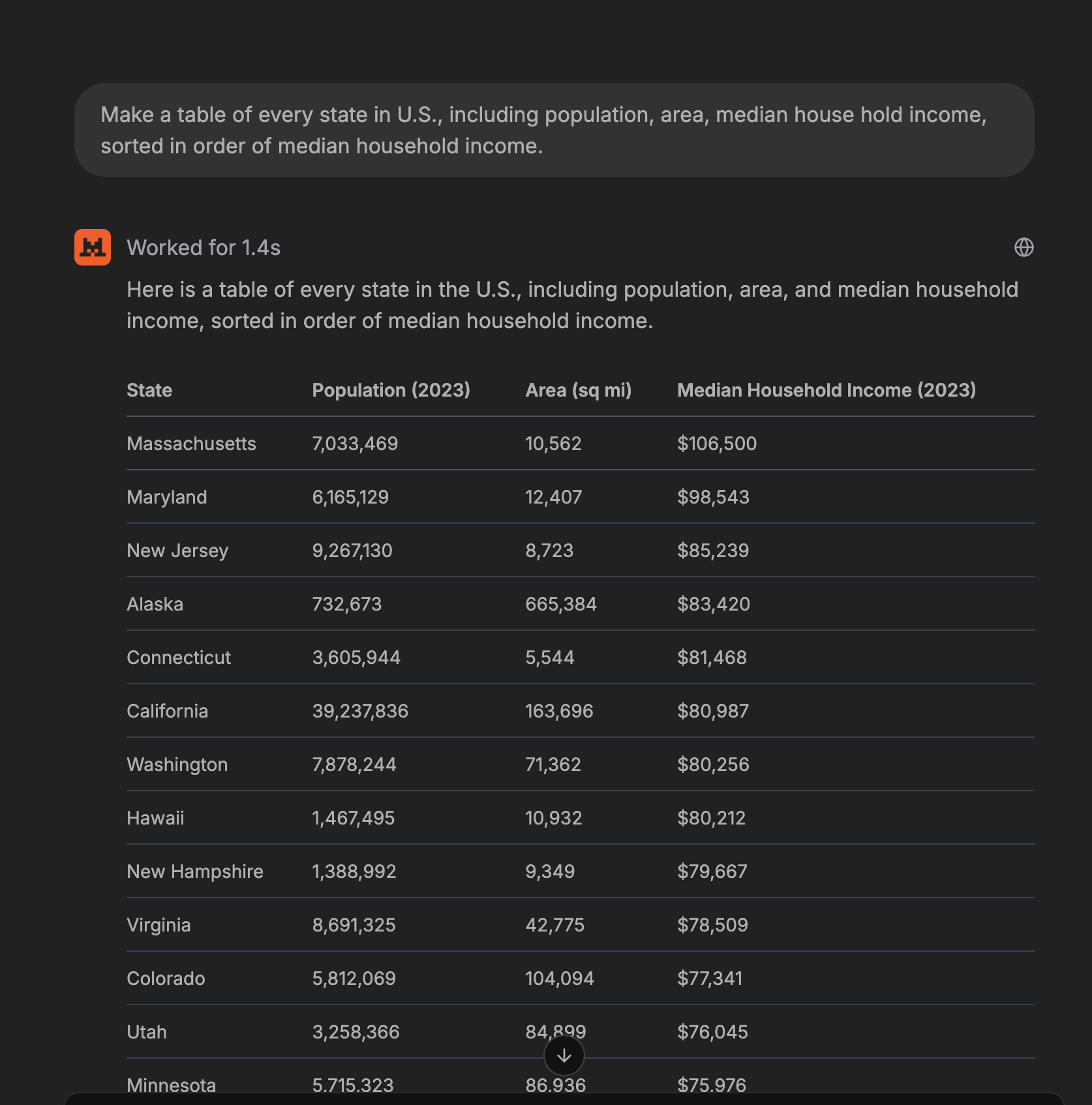

I tried the same prompt in other models

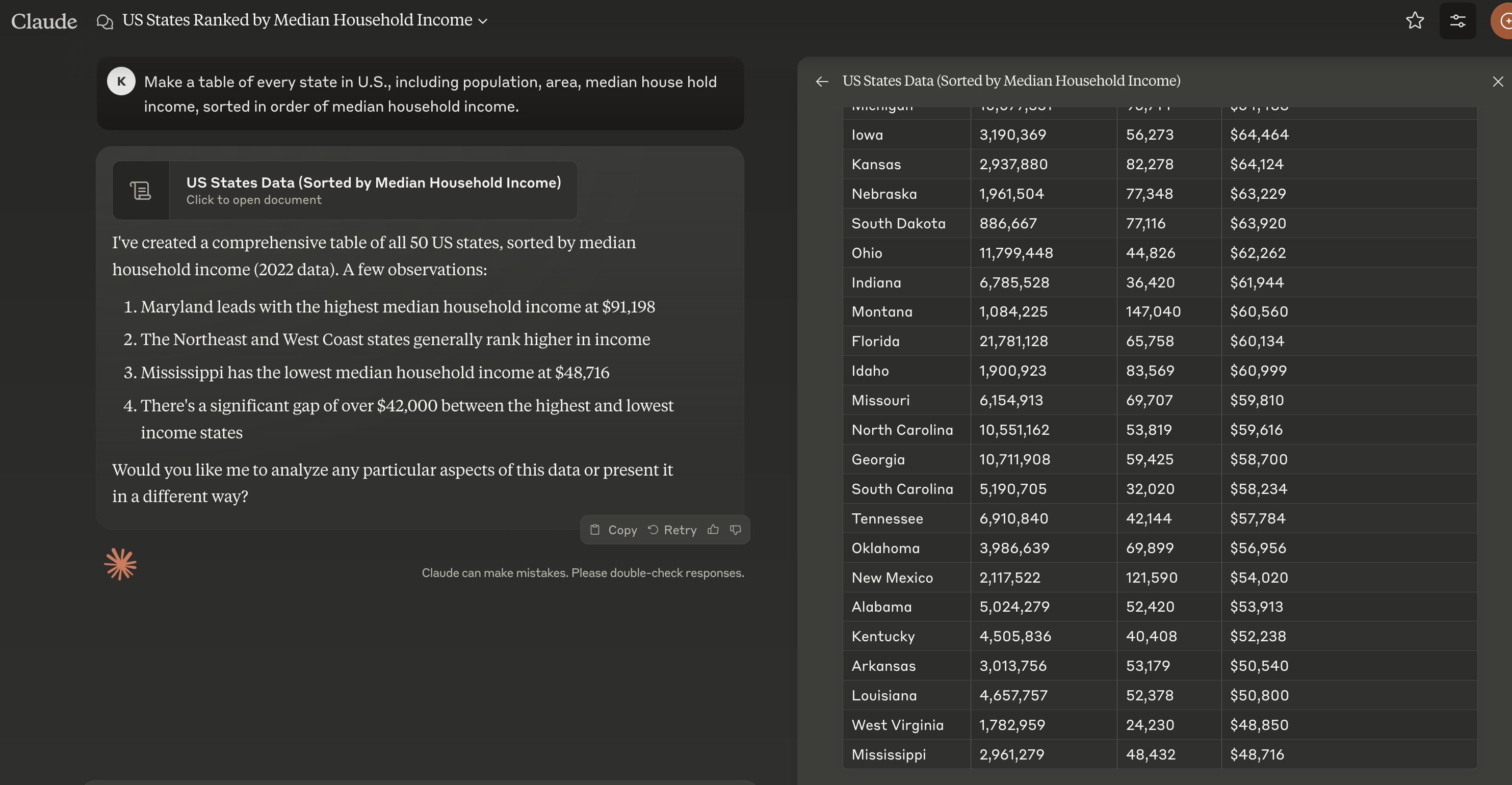

### Claude

Produced well structured output with an extra summary and further asking for more task.

### Deepseek

Similar to Claude's output Deepseek did produce all states including a summary.

[Gemini 2.0 Flash](https://g.co/gemini/share/ea864e8105c1)

By the far the Gemini output is well-structured with rank column, option to export the results to google sheets and summary at the end.

### Le Chat

[Le Chat](https://chat.mistral.ai/chat) produced all the fifty states with the sources.

In the overall exercise it's clear to see small variation across models and clearly other models produce better output compared to ChatGPT.

It's confusing to see different behaviour from ChatGPT.

- [Notes on Four Blog Posts on How I use LLM](https://kracekumar.com/post/notes-on-ai-coding-assistant/index.md): Notes on Four Blog Posts on How I use LLM

---

title: "Notes on Four Blog Posts on How I use LLM"

date: 2025-01-30T22:06:26+05:30

draft: false

tags:

- AI

- LLM

- Practices

---

Over the past few weeks, several top software engineers have published blog posts about how they use AI. Here are a few of the posts I came across in various forums:

- [Why I use Cline for AI Engineering](https://addyo.substack.com/p/why-i-use-cline-for-ai-engineering) by Addy Osmani

- [How I use AI](https://nicholas.carlini.com/writing/2024/how-i-use-ai.html) by Nicholas Carlini

- [How I Use AI: Meet My Promptly Hired Model Intern](https://lucumr.pocoo.org/2025/1/30/how-i-ai/) by Armin Ronacher

- [Building personal software with Claude](https://blog.nelhage.com/post/personal-software-with-claude/) by Nelson Elhage

Below, I’ve compiled my personal notes on these posts. I’ll highlight key points, share my thoughts, and reflect on what stood out to me as particularly interesting or novel.

### [Why I use Cline for AI Engineering](https://addyo.substack.com/p/why-i-use-cline-for-ai-engineering) by Addy Osmani

**Author's Bio**: Addy Osmani is an Irish Software Engineer and leader currently working on the Google Chrome web browser.

[Cline is a coding agent VS Code extension](https://github.com/cline/cline). The description from the GitHub Repo

> Autonomous coding agent right in your IDE, capable of creating/editing files, executing commands, using the browser,

> and more with your permission every step of the way.

In this blog post, Addy Osmani presents an interesting mental model for thinking about

Cline not as an interactive Q&A system, but as a system tool for suggesting or modifying code blocks.

> Cline approaches AI assistance differently from most tools in the market.

> Rather than focusing solely on code generation or completion, it operates as a systems-level tool that can

> interact with your entire development environment. This becomes particularly valuable when dealing with complex debugging

> scenarios, large-scale refactoring, or integration testing.

**The DeepSeek-R1 + Sonnet hybrid approach**

> Recent benchmarks and user experiences have shown that combining DeepSeek-R1 for planning with Claude 3.5 Sonnet

> for implementation can reduce costs by up to 97% while improving overall output quality.

The combination is interesting and looks similar to plumbing various Unix commands through pipes

to achieve the desired output rather than using a single command.

> Cline's ability to switch between models seamlessly makes this hybrid approach practical.

> With the v3.2.6 update, the system even remembers your preferred model for each mode,

> making it effortless to maintain optimal model selection for different types of tasks.

> You're not stuck with a single model's trade-offs - you can optimize for cost, capability,

> or speed depending on the specific task at hand.

**Checkpoints: Version control beyond git**

> The system operates independently of your regular git workflow, preventing the need to pollute commit

> history with experimental changes.

This is the first time I have come across the concept, and I am intrigued to try it out.

**Computer Use: Runtime awareness**

> Above, Cline was able to connect to launch Chrome to verify that a set of changes correctly rendered.

> It notices that there was a Next.js error and can proactively address this without me copy/pasting

> issues back and forth. This is a game-changer.

> This bridges a crucial gap between static code analysis and runtime behavior - something particularly

> valuable when dealing with complex web applications or distributed systems.

This looks promising if you're doing web development and a lot of front-end development.

**Conclusion**

> The trade-off of additional complexity for greater control and capability makes sense for serious development work.

> While simpler tools might be sufficient for basic tasks, Cline's system-level approach provides unique value for

> complex engineering challenges.

Cline's philosophy for a being coding agent is what stands out.

### [How I Use "AI"](https://nicholas.carlini.com/writing/2024/how-i-use-ai.html) by Nicholas Carlini

**Author Bio**: Nicholas Carlini is a research scientist at Google DeepMind.

> But the reason I think that the recent advances we've made aren't just hype is that, over the past year,

> I have spent at least a few hours every week interacting with various large language models,

> and have been consistently impressed by their ability to solve increasingly difficult tasks

> I give them. And as a result of this, I would say I'm at least 50% faster at writing code

> for both my research projects and my side projects as a result of these models.

The approach of tinkering or using LLMs to solve coding problems on a regular basis is noteworthy

> If I were to categorize these examples into two broad categories,

> they would be “helping me learn” and “automating boring tasks”.

> Helping me learn is obviously important because it means that I can now do things

> I previously would have found challenging; but automating boring tasks is (to me)

> actually equally important because it lets me focus on what I do best, and solve the hard problems.

Rather than thinking of an LLM as replacing you in your job, using it as a tool to improve your skillset

and enhance your knowledge by using it as a companion seems to be a common pattern.

**As a tutor for new technologies**

>But today, [I'll just ask a language model to teach me Docker](https://chatgpt.com/share/40dcc017-9cc6-4a99-8eac-959a171fbb2f). So here's me doing just that.

This is a recurring theme, and a lot of folks are doing it. Last week, I was using DeepSeek to do something similar

and was impressed by the accuracy and reliability (though there’s still a long way to go for unpopular languages).

A year back, LLMs had high false positive rates for suggestions (anecdotal). Recently, at least for the top six languages,

the quality of the suggestions has significantly improved.

**To simplify code**

> Now golly has a CLI tool that does what I want---all I needed was a way to call into it correctly.

> The first step of this was to take the C++ code that supports something like 50 different

> command line options and just get it to do exactly the one thing I wanted.

> So I just dumped all 500 lines of C++ into the LLM and asked for a shorter file that would do the same thing.

> And you know what? It worked flawlessly. Then I just asked for a Python wrapper around the C++ code.

> And that worked too.

This is a fabulous testimonial. The concept of using it for code reviews, combined with a reasoning model, can significantly enhance one's journey in mastering a particular language.

Overall, I can see the scientist at work here. It’s an excellent use case for automating mundane

tasks and increasing utilitarian value. The article is perfect for anyone

hesitant to try LLMs but looking for ways to improve their quality of life through automation.

### [How I Use AI: Meet My Promptly Hired Model Intern](https://lucumr.pocoo.org/2025/1/30/how-i-ai/) by Armin Ronacher

**Author Bio**: Armin is a well known software engineer who have created various pouplar libraries like [Flask](https://flask.palletsprojects.com/en/stable/),

[Jinja](https://jinja.palletsprojects.com/en/stable/) and co-founder of [Sentry](https://sentry.io/welcome/), SAAS product.

```bash

#!/bin/sh

MODEL=phi4:latest

if ping -q -c1 google.com &>/dev/null; then

MODEL=claude-3-5-sonnet-latest

fi

OLD_TEXT="$(cat)"

llm -m $MODEL "$OLD_TEXT" -s "fix spelling and grammar in the given text,

and reply with the improved text and no extra commentary.

Use double spacing."

```

> This script can automatically switch between a local model (phi4 via Ollama)

> and a remote one (claude-3-5-sonnet-latest) based on internet connectivity.

> With a command like !llm-spell in Vim, I can fix up sentences with a single step.

This is relatable to me because I use grammar correction tools both at work and

for personal blog posts—ensuring my writing is clear and polished.

Like Armin, I face a similar challenge as a non-native English speaker:

maintaining a consistent voice and keeping the same level of engagement throughout a post.

To address this, I use the `llm` command and also invoke it through Raycast as a script command.

**Writing with AI**

> Here are some of the things I use AI for when writing:

> Grammar checking: I compare the AI’s suggested revisions side by side

> with my original text and pick the changes I prefer.

> Restructuring: AI often helps me see when my writing is too wordy.

> In the days before AI, I often ended up with super long articles that did not read well

> and that I did not publish. Models like o1 are very helpful in identifying things that don't need to be said.

> Writing Notes and finding key points: Here, I ask the AI to read through

> a draft “like a Computer Science 101 student” and take notes.

> This helps me see if what it absorbed matches what I intended to convey.

> Roast my Article: I have a few prompts that asks the AI to “roast” or criticize my article,

> as if commenting on Reddit, Twitter, or Hacker News. Even though these critiques seem shallow,

> they can sting, and they often highlight weaknesses in my argument or lack of clarity.

> Even if they don't necessarily impact the writing, they prime me for some of the feedback I inevitably receive.

> Identifying jargon: If I worry there's too much jargon, I use AI to resolve acronyms

> and point out technical terms I've used without explanation, helping me make the text more accessible.

I find three use cases particularly helpful:

(1) writing notes and identifying key points,

(2) having my article critiqued, and

(3) identifying jargon.

Writing notes and identifying key points: This approach provides valuable feedback on

your article by placing the LLM in the reader’s shoes.

**Talking to Her**

> ChatGPT is also incredibly helpful when having to work with multiple languages.

> For a recent example, my kids have Greek friends and we tried to understand the

> difference between some Greek words that came up. I have no idea how to write it,

> Google translate does not understand my attempts of pronouncing them either. However,

> ChatGPT does. If I ask it in voice mode what “pa-me-spee-tee”

> in Greek means it knows what I tried to mumble and replies in a helpful manner.

Lately, I’ve been thinking about improving my pronunciation

of English words using LLMs. For context, I grew up in Tamil Nadu,

in southern India, and I speak with a thick accent. I’ve often had to

repeat myself multiple times due to my pronunciation. I hate it when my

jokes fall flat because of it. Now, it’s time to experiment with LLMs to improve this.

**Final Thoughts**

> My approach isn't about outsourcing thinking, but augmenting it: using LLMs to accelerate grunt work,

> untangle mental knots, and prototype ideas faster. Skepticism is healthy, but dismissing AI outright

> risks missing its potential as a multiplier for those willing to engage critically.

I like the usage of the word, `augmenting`, feels and fits apt.

### [Building personal software with Claude](https://blog.nelhage.com/post/personal-software-with-claude/) by Nelson Elhage

**Working between defined interfaces**

> When working with Claude, I found myself instinctively choosing to break down problems into ones

> with relatively well-defined and testable interfaces. For instance, instead of asking it to make

> changes to the Rust and elisp code in one query, I would ask for a feature to be added to the Rust side,

> which I would then spot-check by inspecting the output JSON, and then ask for the corresponding elisp changes.

This is something I do often in code base, where when I don't like certain pieces of bigger task,

I ask LLM to do A, B, C task separately. Example: Writing a long SQL query. I do this out of habit of

iterative developing the pieces and finally plugging all the components(also writing the most exciting stuff first!)

The entire post covers how the author fixed a performance issue with emacs lisp function that was interacting with [obsidian.md](https://obsidian.md/).

It's fanatastic plug for using LLM and it's coding capability.

### Conclusion

- I enjoyed reading all these articles especially how everyone perceives, utilises the LLM's power to improve

the quality of their work and life.

- One thing that's clear is to get real value out of LLM, you're curious and invest enough time to learn. Then you seek rewards regularly.

- The four blog posts had four different approaches.

- Addy's use case of using cline to do complex engineering tasks was backed by solid thoughts and example cases.

- Nicholas' had exhaustive use cases listed and detailed experiments from a scientist's lab.

The breadth of usage was astonishing and results were too.

- Armin's usecase was personal and technical experiences. Armin also delves the use case how his kids use LLM,

that reminds LLM has utilitarian value for everyone.

- Nelson's post was clear show case of using LLM to fix performance issue. I remember playing with [ChatGPT](https://kracekumar.com/post/chatgpt-gh-profile-lookup/)

for coding task back in 2022. In the last 2 years, a significant improvement in LLM is clearly visible and expect to see

more such cases of LLM in improving quality of code.

**Disclaimer**: The post is not a slop(no sumamrization) but LLM was used to improve the grammar.

This is something I often do in my codebase. When I don’t like certain parts of a larger task, I ask the LLM to handle tasks A, B, and C separately. For example, when writing a long SQL query, I iteratively develop smaller pieces and then combine them—often starting with the most exciting parts first!

The post details how the author fixed a performance issue with an Emacs Lisp function that interacted with [Obsidian.md](https://obsidian.md/).

It’s a fantastic showcase of using LLMs and their coding capabilities.

### Conclusion

I enjoyed reading all these articles, especially seeing how everyone perceives and utilises the power of LLMs to improve their work and life.

One thing is clear: to get real value out of LLMs, you need curiosity and a willingness to invest time in learning. The rewards come with consistent effort.

Each of the four blog posts took a unique approach:

- Addy’s use case of using Cline for complex engineering tasks was backed by solid reasoning and practical examples.

- Nicholas provided an exhaustive list of use cases and detailed experiments, showcasing the breadth of LLM applications and their impressive results.

- Armin shared personal and technical experiences, including how his kids use LLMs—highlighting their utilitarian value for everyone.

- Nelson’s post was a clear demonstration of using LLMs to fix performance issues. It reminded me of my own experiments with [ChatGPT](https://kracekumar.com/post/chatgpt-gh-profile-lookup/) for coding tasks back in 2022. Over the past two years, significant improvements in LLMs have become evident, and I expect to see even more cases of LLMs enhancing code quality.

**Disclaimer**: This post is not AI-generated slop (no summarization), but an LLM was used to improve grammar.

- [DeepSeek R1 Aider Benchmark](https://kracekumar.com/post/deepseek_r1_aider_benchmark/index.md): DeepSeek R1 Aider Benchmark

---

title: "DeepSeek R1 Aider Benchmark"

date: 2025-01-26T00:52:45+05:30

draft: false

tags: ["LLM", "benchmark", "aider"]

---

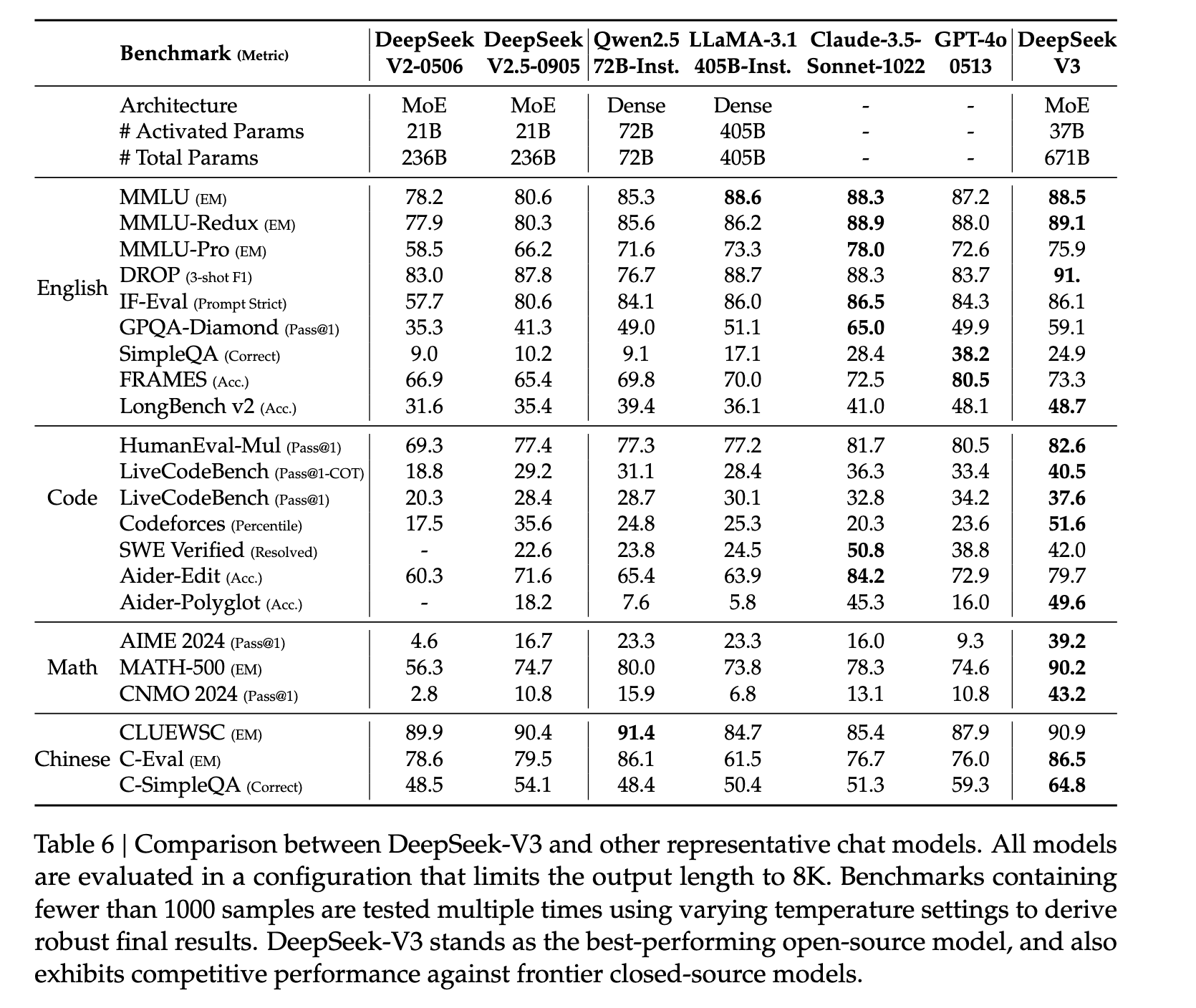

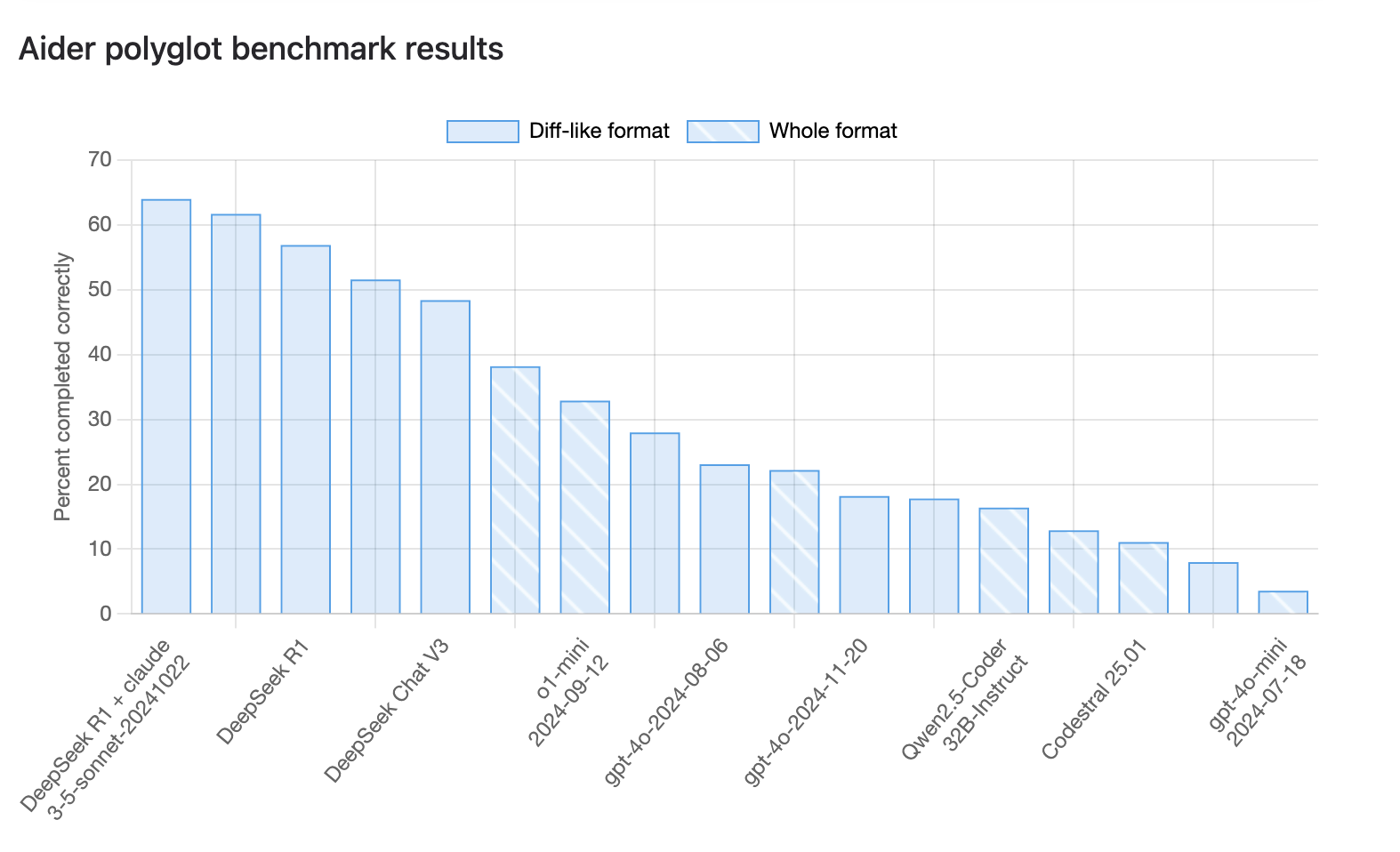

[DeepSeek recently released its R1 model](https://github.com/deepseek-ai/DeepSeek-R1/blob/main/DeepSeek_R1.pdf), a state-of-the-art LLM that outperforms all available reasoning models on the market.

The accompanying paper includes a comprehensive comparison across 21 benchmarks in four categories:

`English, Code, Math, and Chinese` .

As a software engineer, I was particularly curious about the Code category and

decided to explore the datasets and evaluation criteria.

While many benchmarks in this category were either poorly documented or required extensive dataset downloads.

Aider-polyglot stood out for its clear documentation and ease of use, [benchmark script](https://github.com/Aider-AI/aider/blob/main/benchmark/benchmark.py)

### What is Aider?

The benchmark is based on programming problems from exercism.io and covers six popular languages: `Python, Java, JavaScript, C++, Rust, and Go`.

The [README](https://github.com/Aider-AI/aider/blob/main/benchmark/README.md) provides step-by-step instructions for running the benchmarks,

making it accessible even for those new to the AI.

### Running the benchmark

Set the `DEEPSEEK_API_KEY` while running the benchmark command.

I used the hosted version of DeepSeek to run the benchmark.

Here’s the command I executed for the Python benchmarks:

```bash

$ ./benchmark/benchmark.py test-deepseek-r1-run --model r1 --edit-format whole --threads 10 --exercises-dir polyglot-benchmark --verbose --new --languages python

```

**Key CLI Parameters:**

- `model: r1` (indicating the DeepSeek R1 model).

- `edit-format: whole` (the other option is edit, which was used in the original paper).

- `threads: 10` (number of Python threads to run in parallel).

- `languages`: python (by default, all languages are benchmarked).

**Output:**

```bash

- dirname: 2025-01-25-19-03-46--test-deepseek-r1-run

test_cases: 34

model: deepseek/deepseek-reasoner

edit_format: whole

commit_hash: b276d48

pass_rate_1: 35.3

pass_rate_2: 64.7

pass_num_1: 12

pass_num_2: 22

percent_cases_well_formed: 100.0

error_outputs: 0

num_malformed_responses: 0

num_with_malformed_responses: 0

user_asks: 0

lazy_comments: 0

syntax_errors: 0

indentation_errors: 0

exhausted_context_windows: 0

test_timeouts: 1

total_tests: 225

command: aider --model deepseek/deepseek-reasoner

date: 2025-01-25

versions: 0.72.3.dev

seconds_per_case: 226.0

total_cost: 0.9313

costs: $0.0274/test-case, $0.93 total, $6.16 projected

```

Most fields are self-explanatory, but two key metrics stand out: `pass_rate_1` and `pass_rate_2`,

which indicate the percentage of problems solved on the first and second attempts, respectively.

The R1 model achieved a `64.7%` pass rate across 34 exercises.

From the [official leaderboard](https://aider.chat/2024/12/21/polyglot.html) the pass rate of `56.9%` across langauges.

This is not like to like comparision but for illustrative purpose.

Notably, the official website does not distinguish between pass rates for the first and second attempts.

### Conclusion

During the benchmark, I encountered a temporary issue where the DeepSeek API returned a 503 error.

While Aider employs exponential backoff to retry failed exercises, recovery can be time-consuming.

Following are some of the results from other language benchmarks except Java.

### C++

```

$./benchmark/benchmark.py test-deepseek-r1-run-cpp --model r1 --edit-format whole --threads 10 --exercises-dir polyglot-benchmark --verbose --new --languages cpp

- dirname: 2025-01-25-19-26-20--test-deepseek-r1-run-cpp

test_cases: 26

model: deepseek/deepseek-reasoner

edit_format: whole

commit_hash: b276d48

pass_rate_1: 19.2

pass_rate_2: 69.2

pass_num_1: 5

pass_num_2: 18

percent_cases_well_formed: 100.0

error_outputs: 0

num_malformed_responses: 0

num_with_malformed_responses: 0

user_asks: 0

lazy_comments: 0

syntax_errors: 0

indentation_errors: 0

exhausted_context_windows: 0

test_timeouts: 0

total_tests: 225

command: aider --model deepseek/deepseek-reasoner

date: 2025-01-25

versions: 0.72.3.dev

seconds_per_case: 410.2

total_cost: 0.4168

costs: $0.0160/test-case, $0.42 total, $3.61 projected

```

### Go

```

$./benchmark/benchmark.py test-deepseek-r1-run-go --model r1 --edit-format whole --threads 10 --exercises-dir polyglot-benchmark --verbose --new --languages go

rname: 2025-01-26-07-44-16--test-deepseek-r1-run-go

test_cases: 39

model: deepseek/deepseek-reasoner

edit_format: whole

commit_hash: b276d48

pass_rate_1: 41.0

pass_rate_2: 66.7

pass_num_1: 16

pass_num_2: 26

percent_cases_well_formed: 100.0

error_outputs: 0

num_malformed_responses: 0

num_with_malformed_responses: 0

user_asks: 3

lazy_comments: 0

syntax_errors: 0

indentation_errors: 0

exhausted_context_windows: 0

test_timeouts: 1

total_tests: 225

command: aider --model deepseek/deepseek-reasoner

date: 2025-01-26

versions: 0.72.3.dev

seconds_per_case: 204.4

total_cost: 0.8196

costs: $0.0210/test-case, $0.82 total, $4.73 projected

```

### Javascript

```

./benchmark/benchmark.py test-deepseek-r1-run-javascript --model r1 --edit-format whole --threads 10 --exercises-dir polyglot-benchmark --verbose --languages javascript --new

- dirname: 2025-01-26-14-52-31--test-deepseek-r1-run-javascript

test_cases: 49

model: deepseek/deepseek-reasoner

edit_format: whole

commit_hash: b276d48

pass_rate_1: 22.4

pass_rate_2: 57.1

pass_num_1: 11

pass_num_2: 28

percent_cases_well_formed: 100.0

error_outputs: 0

num_malformed_responses: 0

num_with_malformed_responses: 0

user_asks: 2

lazy_comments: 0

syntax_errors: 0

indentation_errors: 0

exhausted_context_windows: 0

test_timeouts: 1

total_tests: 225

command: aider --model deepseek/deepseek-reasoner

date: 2025-01-26

versions: 0.72.3.dev

seconds_per_case: 236.6

total_cost: 1.2589

costs: $0.0257/test-case, $1.26 total, $5.78 projected

```

### Rust

```

./benchmark/benchmark.py test-deepseek-r1-run-rust --model r1 --edit-format whole --threads 10 --exercises-dir polyglot-benchmark --verbose --languages rust --new

- dirname: 2025-01-26-15-18-05--test-deepseek-r1-run-rust

test_cases: 30

model: deepseek/deepseek-reasoner

edit_format: whole

commit_hash: b276d48

pass_rate_1: 50.0

pass_rate_2: 63.3

pass_num_1: 15

pass_num_2: 19

percent_cases_well_formed: 100.0

error_outputs: 0

num_malformed_responses: 0

num_with_malformed_responses: 0

user_asks: 3

lazy_comments: 0

syntax_errors: 0

indentation_errors: 0

exhausted_context_windows: 0

test_timeouts: 0

total_tests: 225

command: aider --model deepseek/deepseek-reasoner

date: 2025-01-26

versions: 0.72.3.dev

seconds_per_case: 174.1

total_cost: 0.7162

costs: $0.0239/test-case, $0.72 total, $5.37 projected

```

- [ndjson](https://kracekumar.com/post/ndjson/index.md): ndjson

---

title: ndjson

date: 2025-01-14T23:16:25+05:30

draft: false

tags:

- HTTP

- TIL

---

```python

curl http://localhost:11434/api/generate -d '{

"model": "llama3.2",

"prompt": "Where is Dublin? Answer in a six words"

}'

{"model":"llama3.2","created_at":"2025-01-14T17:48:33.15898Z","response":"Located","done":false}

{"model":"llama3.2","created_at":"2025-01-14T17:48:33.183229Z","response":" on","done":false}

{"model":"llama3.2","created_at":"2025-01-14T17:48:33.206942Z","response":" the","done":false}

{"model":"llama3.2","created_at":"2025-01-14T17:48:33.230918Z","response":" east","done":false}

{"model":"llama3.2","created_at":"2025-01-14T17:48:33.254533Z","response":" coast","done":false}

{"model":"llama3.2","created_at":"2025-01-14T17:48:33.278113Z","response":" Ireland","done":false}

{"model":"llama3.2","created_at":"2025-01-14T17:48:33.301689Z","response":".","done":false}

{"model":"llama3.2","created_at":"2025-01-14T17:48:33.3255Z","response":"","done":true,"done_reason":"stop","context":[128006,9125,128007,271,38766,1303,33025,2696,25,6790,220,2366,18,271,128009,128006,882,128007,271,9241,374,33977,30,22559,304,264,4848,4339,128009,128006,78191,128007,271,48852,389,279,11226,13962,14990,13],"total_duration":2392671125,"load_duration":575523041,"prompt_eval_count":34,"prompt_eval_duration":1649000000,"eval_count":8,"eval_duration":167000000}

```

I was playing around with ollama API to explore the API capabilities and noticed the HTTP response was streaming JSON that prompted me to look into the response headers.

```python

curl -v http://localhost:11434/api/generate -d '{

"model": "llama3.2",

"prompt": "Where is Dublin? Answer in a six words"

}'

* Host localhost:11434 was resolved.

* IPv6: ::1

* IPv4: 127.0.0.1

* Trying [::1]:11434...

* connect to ::1 port 11434 from ::1 port 49217 failed: Connection refused

* Trying 127.0.0.1:11434...

* Connected to localhost (127.0.0.1) port 11434

> POST /api/generate HTTP/1.1

> Host: localhost:11434

> User-Agent: curl/8.7.1

> Accept: */*

> Content-Length: 250

> Content-Type: application/x-www-form-urlencoded

>

* upload completely sent off: 250 bytes

< HTTP/1.1 200 OK

< Content-Type: application/x-ndjson

< Date: Tue, 14 Jan 2025 17:49:29 GMT

< Transfer-Encoding: chunked

<

...

```

The content type is `application/x-ndjson` and quick search hinted it's a new line separated JSON that can be used in streaming protocols. Also the `Transfer-Encoding`is chunked and fits well with for LLM responses over the wire.

```python

curl http://localhost:11434/api/generate -d '{

"model": "llama3.2",

"prompt": "Where is Dublin? Answer in a six words"

}' | jq .response

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 1399 0 1149 100 250 3291 716 --:--:-- --:--:-- --:--:-- 3997

"Located"

" on"

" the"

" east"

" coast"

" Ireland"

"."

""

```

Also `jq`could handle new line delimited json.

### JSON Streaming formats

While researching further on [JSON streaming](https://en.wikipedia.org/wiki/JSON_streaming) there are several other approaches to stream JSON objects. Notable ones are `ndjson, jsonl, json-seq`. All these formats are useful for processing and parallelising large JSON objects without loading entire dataset into the memory.

**Syntax**

- `ndjson`: Uses a newline character (`\n`) to separate each JSON object, and no whitespace is allowed between objects or values. Example: `{"some":"thing\n"}`. Only single `\n`

- `jsonl` ([JSON Lines](https://jsonlines.org/on_the_web/)): Similar to `ndjson`, but allows for optional whitespace around the `\n` separator and `\r\n` in windows. Example: `{"name": "John", "age": 30}\r\n`

- `json-seq` (JSON Sequence): Each JSON object prefixed by an ASCII Record Separator (0x1E), and each ending with an ASCII Line Feed character (0x0A). Example: `␞{"d":"2014-09-22T21:58:35.270Z","value":6}`

It's quite interesting to see the different use cases of different variations of JSON formats.

- [Subtitle Generator Using Whisper](https://kracekumar.com/post/subtitle-generator-using-whisper/index.md): Subtitle Generator Using Whisper

---

title: Subtitle Generator Using Whisper

date: 2025-01-12T22:30:34+05:30

draft: false

tags:

- AI

- language_model

---

I want to generate the subtitles for the `Normal People`TV series in my laptop using LLM. After searching a bit, whisper from OpenAI was a proper fit.

### Step 1: Extracting Audio from Video

The first step is to extract the audio from the video file using `ffmpeg` and store it separately.

```python

ffmpeg -i /Users/kracekumar/Movies/TV/Normal.People.S01/Normal.People.S01E01.mp4 -vn -acodec copy /Users/kracekumar/Movies/TV/Normal.People.S01/audio/Normal.People.S01E01.aac

```

### Step 2: Converting Audio to Text

The second step is to run the audio file through the [whisper model](https://github.com/openai/whisper) from OpenAI. I use uv to install and run inside a project.

```python

uv run whisper /Users/kracekumar/Movies/TV/Normal.People.S01/audio/Normal.People.S01E01.aac --model turbo -f srt --output_dir /Users/kracekumar/Movies/TV/Normal.People.S01/generated_subs/

```

Here is the first ten subtitles generated from the model

```

1

00:00:00,000 --> 00:00:24,000

It's a simple game. You have 15 players. Give one of them the ball. Get it into the net.

2

00:00:24,000 --> 00:00:26,000

Very simple. Isn't it?

3

00:00:26,000 --> 00:00:31,000

Brilliant. How's it going, Rachel? Talking tactics there for the big game.

4

00:00:31,000 --> 00:00:35,000

We're getting a masterclass. How incredibly boring of you.

5

00:00:35,000 --> 00:00:39,000

Yeah. Did you use your hair though? I did, yeah.

6

00:00:39,000 --> 00:00:44,000

It's very pretty. Thanks. Can I use my locker? By any chance?

7

00:00:44,000 --> 00:00:50,000

Yeah. Yeah, I sorta need you to move, Connell.

8

00:00:50,000 --> 00:00:55,000

Oh, sorry. Excuse me. Sorry. Excuse me. Right, relax, will ya?

9

00:00:55,000 --> 00:01:00,000

Okay, now that's important because it's turned up in the exam twice out of the last three years.

10

00:01:02,000 --> 00:01:03,000

Marianne.

```

Here is subtitles from the other site

```

1

00:00:18,989 --> 00:00:20,269

It's a simple game.

2

00:00:20,320 --> 00:00:22,642

You have 15 players.

Give one of them the ball,

3

00:00:22,693 --> 00:00:24,048

get it into the net.

4

00:00:24,099 --> 00:00:25,708

- Very simple.

- Isn't it?

5

00:00:26,052 --> 00:00:27,192

Oh, what?

6

00:00:27,415 --> 00:00:28,535

Brilliant.

7

00:00:28,833 --> 00:00:31,520

How's it going, Rachel?

Talking tactics, there, for the game.

8

00:00:31,571 --> 00:00:33,200

We're getting a master class.

9

00:00:33,598 --> 00:00:35,965

- How incredibly boring of you.

- Yeah.

10

00:00:36,601 --> 00:00:38,570

- Did you get your hair done?

- I did, yeah.

```

The complete generated subtitles can be found in [gist](https://gist.github.com/kracekumar/efe9da9ea0d13e42b10f1fc7eaad5c50)

### Comparison with original subtitles

The LLM produced close to perfect subtitles in terms of text and highly useful with certain annoying behaviours

1. **Text appears before characters starts to speak**: The first generated text appears as soon a video starts whereas in the original file, starts at 18th second. When there is a long pause in the video, the next dialogue appears immediately.

2. **Subtitle Length**: The first subtitle, `It's a simple game. You have 15 players. Give one of them the ball. Get it into the net.`is longer and represents 6 seconds of dialogue. The splitting these into multiple sequences would be useful especially for movie subtitles but may not matter for speech to text.(couldn't find any CLI options)

3. **Inconsistent Punctuation**: While some generated text includes proper punctuation, other sections lack it. The CLI offers `--append_punctuations, --prepend_punctations` to address this, but I haven't tried.

### Script to bulk convert

```python

import os

import subprocess

import argparse

def extract_audio(input_dir, output_dir):

"""

Extracts audio from video files in the input directory and saves them to the output directory.

Args:

input_dir: Path to the directory containing video files.

output_dir: Path to the directory where audio files will be saved.

"""

if not os.path.exists(output_dir):

os.makedirs(output_dir)

for filename in os.listdir(input_dir):

if filename.endswith(('.mp4', '.avi', '.mov')): # Add more video extensions if needed

input_path = os.path.join(input_dir, filename)

output_path = os.path.join(output_dir, os.path.splitext(filename)[0] + '.aac')

command = f"ffmpeg -i {input_path} -vn -acodec copy {output_path}"

# Add logging to track

print(f"Running the command: {command}")

subprocess.run(command, shell=True)

def generate_subtitles(input_dir, output_dir):

"""

Generates subtitles for audio files using the Whisper LLM model.

Args:

input_dir: Path to the directory containing audio files.

output_dir: Path to the directory where subtitle files will be saved.

"""

if not os.path.exists(output_dir):

os.makedirs(output_dir)

for filename in os.listdir(input_dir):

if filename.endswith(('.aac', '.wav')):

input_path = os.path.join(input_dir, filename)

command = f"whisper {input_path} --model turbo -f srt --output_dir {output_dir}"

# Adjust model size ('tiny', 'base', 'small', 'medium', 'large') as needed

print(f"Running the command: {command}")

subprocess.run(command, shell=True)

if __name__ == "__main__":

parser = argparse.ArgumentParser(description="Extract audio and generate subtitles.")

parser.add_argument("input_dir", help="Path to the directory containing video files.")

parser.add_argument("audio_dir", help="Path to the directory to save extracted audio files.")

parser.add_argument("subtitle_dir", help="Path to the directory to save generated subtitles.")

args = parser.parse_args()

extract_audio(args.input_dir, args.audio_dir)

generate_subtitles(args.audio_dir, args.subtitle_dir)

```

I asked Gemini to generate the code for the task. The following is the code that was generated by the model. The prompt was basic and as follows

```

Write a Python program that takes a command line arguments to do following tasks

1) Get a directory that contains video files and extracts the audio from the video and stores the audio in a separate directory using ffmpeg command. If the output directory is missing, new directory should be created.

2) Then audio file is passed on to the command of whisper llm model to produce the sub ttitles for the audio file. The output should be stored in a new directory

```

From the generated code, I modified two things

1) The command line arguments to ffmpeg and whisper command

2) Add a log line to print the current command to track progress.

### Chinese language

After successful english subtitles generation, I was tempted to try non-english audio. for `In the mood for love` movie. The whisper model failed to convert the generated chinese translation to English.

```python

$uv run whisper /Users/kracekumar/Movies/In.the.Mood.for.Love/audio/In.the.Mood.for.Love.mp4 --model turbo -f srt --output_dir /Users/kracekumar/Movies/In.the.Mood.for.Love/generated_sub --language zh --task translate

[00:00.000 --> 00:00.180]

[00:30.000 --> 00:30.180]

[01:00.000 --> 01:00.160] The frustrating is for thoseatks. It's beautiful and adorable and significant. It's adorable and typical. There's a blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank blank

[01:30.000 --> 01:30.180]

[02:00.000 --> 02:05.200] 謝謝你, 那我先走了

[02:05.260 --> 02:06.680] of息, 再見

[02:07.800 --> 02:11.360] 請問你們有嗎?

[02:11.520 --> 02:15.200] 對不起, 房間剛剛租給一位太太

[02:15.520 --> 02:16.520] 謝謝你

...

[01:28:35.420 --> 01:28:36.420] 謝謝

[01:28:36.420 --> 01:28:37.420]

Traceback (most recent call last):

File "/Users/kracekumar/code/s2t/.venv/lib/python3.12/site-packages/whisper/transcribe.py", line 598, in cli

writer(result, audio_path, **writer_args)

File "/Users/kracekumar/code/s2t/.venv/lib/python3.12/site-packages/whisper/utils.py", line 101, in __call__

self.write_result(result, file=f, options=options, **kwargs)

File "/Users/kracekumar/code/s2t/.venv/lib/python3.12/site-packages/whisper/utils.py", line 257, in write_result

for i, (start, end, text) in enumerate(

^^^^^^^^^^

File "/Users/kracekumar/code/s2t/.venv/lib/python3.12/site-packages/whisper/utils.py", line 197, in iterate_result

for subtitle in iterate_subtitles():

^^^^^^^^^^^^^^^^^^^

File "/Users/kracekumar/code/s2t/.venv/lib/python3.12/site-packages/whisper/utils.py", line 147, in iterate_subtitles

last: float = get_start(result["segments"]) or 0.0

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/Users/kracekumar/code/s2t/.venv/lib/python3.12/site-packages/whisper/utils.py", line 72, in get_start

return next(

^^^^^

File "/Users/kracekumar/code/s2t/.venv/lib/python3.12/site-packages/whisper/utils.py", line 73, in <genexpr>

(w["start"] for s in segments for w in s["words"]),

~^^^^^^^^^

KeyError: 'words'

```

### Conclusion

Whisper model was able to provide useable and close to accurate subtitles for the audio. There are a lot of rough edges like producing long text without proper truncation that hampers the experience. I'm pretty sure, there are tweaks to get the perfect subtitles with enough effort.

- [Open-webui in personal laptop](https://kracekumar.com/post/open-webui-in-personal-laptop/index.md): Open-webui in personal laptop

---

title: Open-webui in personal laptop

date: 2024-12-31T23:00:05+05:30

draft: false

tags:

- AI

- chatgpt

- language_model

---

In 2024, Large Language Models (LLMs) and Generative AI(GenAI) exploded at an unimaginable rate. I didn't follow the trend. Currently, there is a news every day on new models. Also, the explosion of models reached a stage where local MacBooks can run a decent enough model. I want to have local model with a decent UI support through web or terminal that provides clean user interface.

I stumbled upon [open-webui](https://github.com/open-webui/open-webui).

>Open WebUI is an [extensible](https://github.com/open-webui/pipelines), feature-rich, and user-friendly self-hosted WebUI designed to operate entirely offline. It supports various LLM runners, including Ollama and OpenAI-compatible APIs. For more information, be sure to check out our [Open WebUI Documentation](https://docs.openwebui.com/).

I have previously tried [llm python package](https://github.com/simonw/llm) to try out stand alone models.

### Installing open-webui

LLM package was setup using `uv` and `python` 3.12. Adding open-webui to the existing package failed because of `ctranslate` version compatability. So I had to run the LLM package and open-webui in Python 3.11 version. After installing open-webui, I expected it to pick up llama model from llm package installation in `~/Library/Application\ Support/io.datasette.llm/`. That didn't work. So I installed ollama mac package with [llama 3.2 model](https://ollama.com/library/llama3.2).

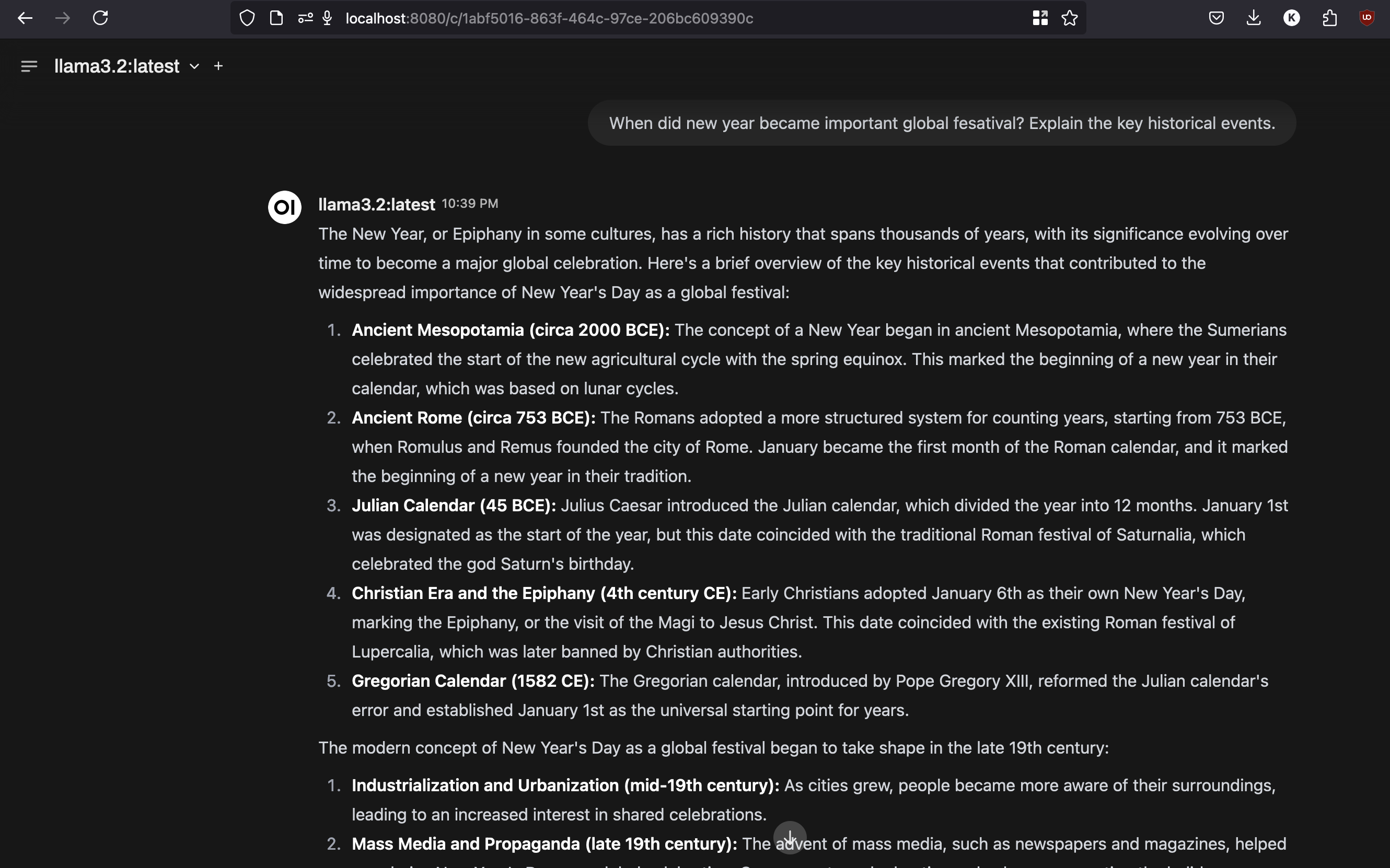

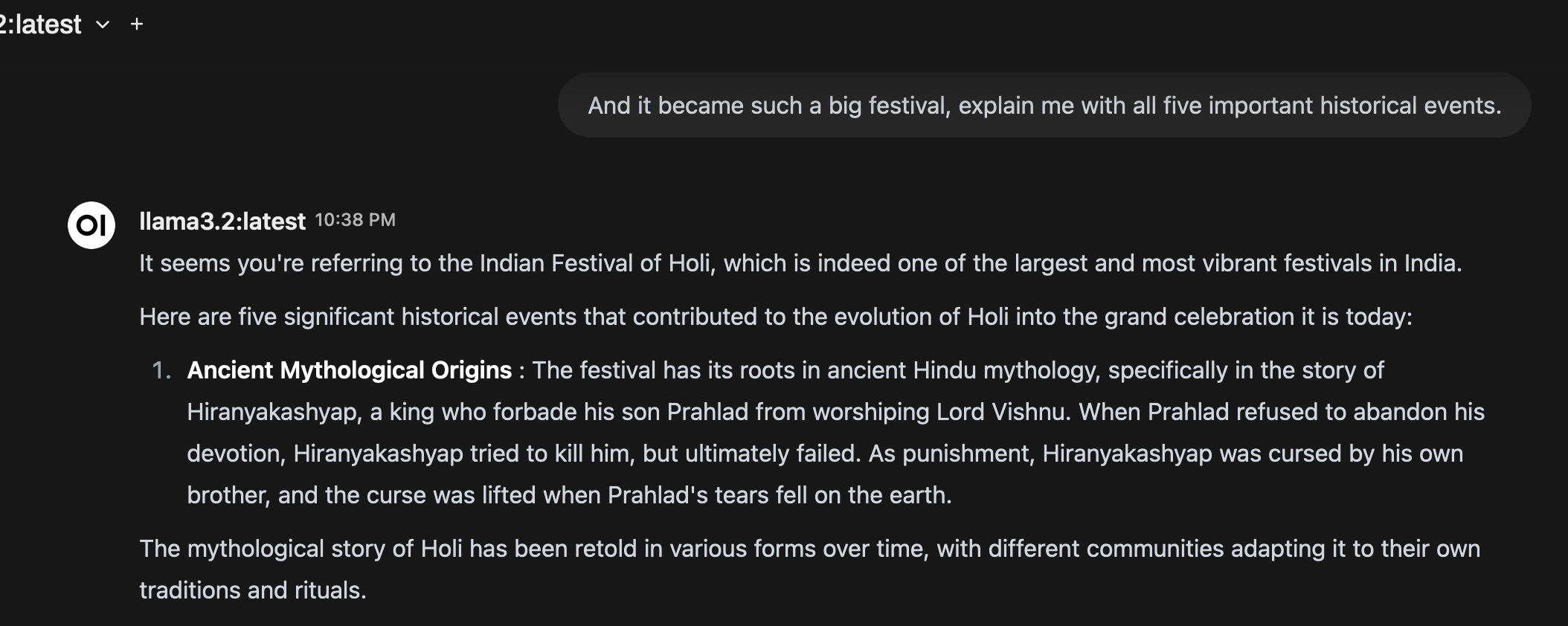

Then the open-webui picked up the model (see the top left corner of the image) without making any changes. I used simple `uv run open-webui serve` to run openwebui in the local machine.

I tried out the a simple question, `When did new year became important global fesatival? Explain the key historical events.`

Here is the answer

The interface looks similar to ChatGPT and usable for long chat.

The voice to text translation was sub-par in the home page, I asked, `explain the beginning of new year and major historical events around it`. The translation was out right wrong and considered new year as holi festival. It skipped first part of the voice message.

- [Chatgpt Generate Ruby Code to Check User Exists in Github](https://kracekumar.com/post/chatgpt-gh-profile-lookup/index.md): Chatgpt Generate Ruby Code to Check User Exists in Github

---

title: "Chatgpt Generate Ruby Code to Check User Exists in Github"

date: 2022-12-04T21:30:15+00:00

draft: false

tags: ["chatgpt", "AI", "language_model"]

---

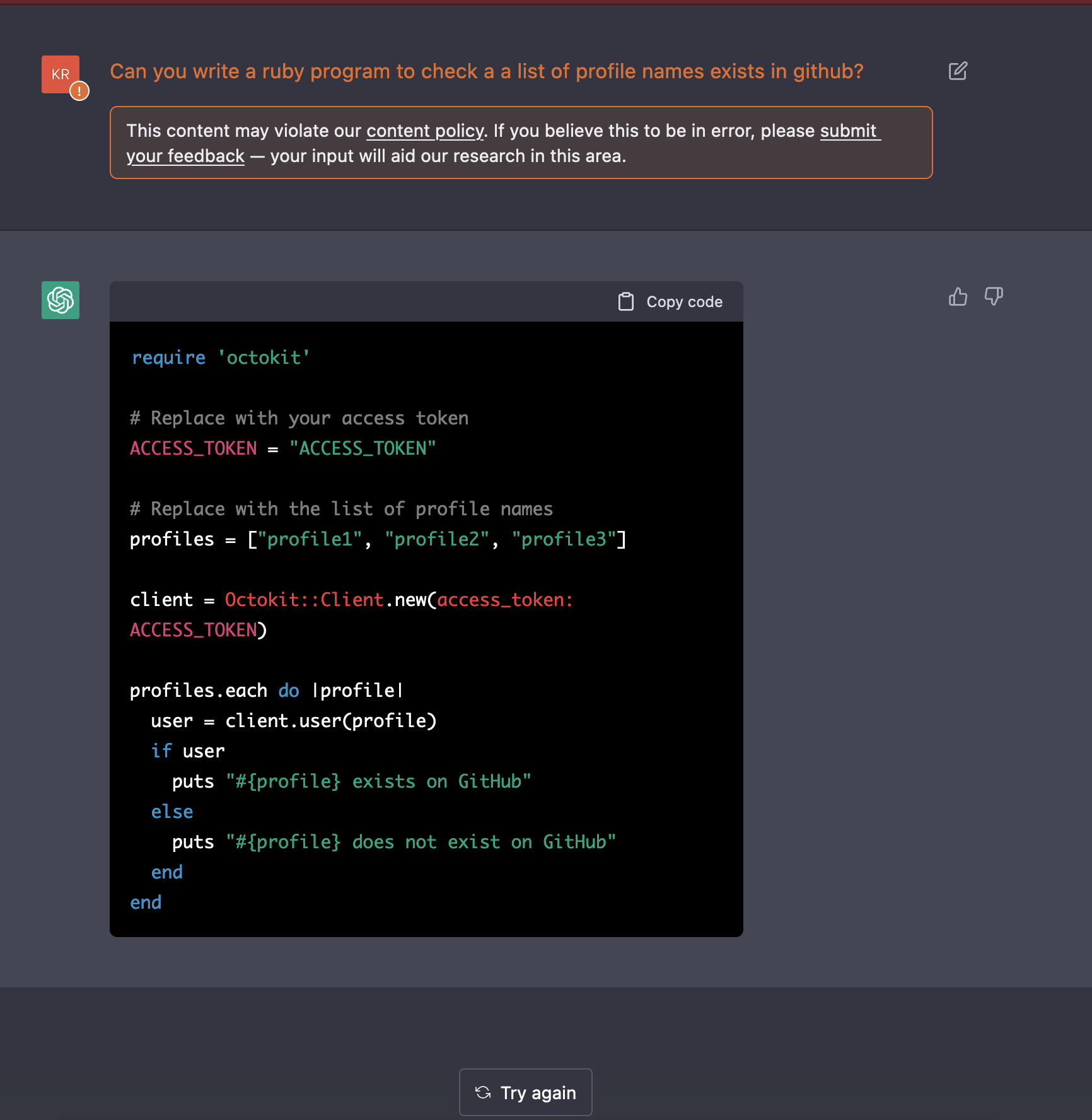

On saturday night, I want to work on a side project. To find a name for the project and I wanted to create an Github organization in the same name. I started trying out the name one after the other, all the names were taken and thought about writing small script in ruby. Then from nowhere I decided to let chatgpt to write the code for me.

In this blog post, I'll share some of the code generated by chatgpt for the checking whether a profile name exists in the Github with increasing complexity.

[You can signup for chatgpt from openai site.](https://chat.openai.com/chat)

# Case 1: Simple case

**Prompt**

```

Can you write a ruby program to check a a list of profile names exists in github?

```

**Generated Code**

```ruby

require 'octokit'

# Replace with your access token

ACCESS_TOKEN = "ACCESS_TOKEN"

# Replace with the list of profile names

profiles = ["profile1", "profile2", "profile3"]

client = Octokit::Client.new(access_token: ACCESS_TOKEN)

profiles.each do |profile|

user = client.user(profile)

if user

puts "#{profile} exists on GitHub"

else

puts "#{profile} does not exist on GitHub"

end

end

```

The code looks legit and may run, but there are two issues

- There is no instruction to install `octokit`.

- Not reading the secret from the environment variable is a bad sign.

Let's enhance the instruction by explicitly stating it.

**Prompt**

```

Can you write a ruby program to check a a list of profile names exists in github?

If you use external gem, specify the installation instruction. Also read secrets from environment variable.

```

**Generated code**

```ruby

require 'octokit'

# Replace with the list of profile names

profiles = ["profile1", "profile2", "profile3"]

# Read access token from environment variable

access_token = ENV["ACCESS_TOKEN"]

client = Octokit::Client.new(access_token: access_token)

profiles.each do |profile|

user = client.user(profile)

if user

puts "#{profile} exists on GitHub"

else

puts "#{profile} does not exist on GitHub"

end

end

```

Along with the code, there is an installation line, `To install the octokit gem, run gem install octokit in your terminal.`

**Output**

```

$ ruby case_1.rb

To use retry middleware with Faraday v2.0+, install `faraday-retry` gem

profile1 exists on GitHub

profile2 exists on GitHub

profile3 exists on GitHub

```

The code actually works!

Now let's slightly modify the requirements to read list of profile names from a file.

# Case 2: Read list of profile names from a file, check the profiles and write to JSON file

**Prompt**

```

Can you write a ruby program to read a list of github profile from a text file,

check each profile exists in github and write the result to a JSON file?

If you use external gem, specify the installation instruction. Also read secrets from environment variable.

```

**Generated code**

```ruby

require 'octokit'

require 'json'

# Read profile names from text file

profiles = File.readlines("profiles.txt").map(&:chomp)

# Read access token from environment variable

access_token = ENV["ACCESS_TOKEN"]

client = Octokit::Client.new(access_token: access_token)

# Create a new hash to store the result

result = {}

profiles.each do |profile|

user = client.user(profile)

if user

result[profile] = true

else

result[profile] = false

end

end

# Write the result to a JSON file

File.open("result.json","w") do |f|

f.write(result.to_json)

end

```

**Output**

```

$cat profiles.txt

kracekumar

zzzzzz

404

$ruby case_2.rb

To use retry middleware with Faraday v2.0+, install `faraday-retry` gem

Traceback (most recent call last):

15: from case_2.rb:15:in `<main>'

14: from case_2.rb:15:in `each'

13: from case_2.rb:16:in `block in <main>'

12: from /Users/krace/.rbenv/versions/2.7.2/lib/ruby/gems/2.7.0/gems/octokit-6.0.1/lib/octokit/client/users.rb:34:in `user'

11: from /Users/krace/.rbenv/versions/2.7.2/lib/ruby/gems/2.7.0/gems/octokit-6.0.1/lib/octokit/connection.rb:19:in `get'

10: from /Users/krace/.rbenv/versions/2.7.2/lib/ruby/gems/2.7.0/gems/octokit-6.0.1/lib/octokit/connection.rb:156:in `request'

9: from /Users/krace/.rbenv/versions/2.7.2/lib/ruby/gems/2.7.0/gems/sawyer-0.9.2/lib/sawyer/agent.rb:99:in `call'

8: from /Users/krace/.rbenv/versions/2.7.2/lib/ruby/gems/2.7.0/gems/faraday-2.7.1/lib/faraday/connection.rb:200:in `get'

7: from /Users/krace/.rbenv/versions/2.7.2/lib/ruby/gems/2.7.0/gems/faraday-2.7.1/lib/faraday/connection.rb:445:in `run_request'

6: from /Users/krace/.rbenv/versions/2.7.2/lib/ruby/gems/2.7.0/gems/faraday-2.7.1/lib/faraday/rack_builder.rb:153:in `build_response'

5: from /Users/krace/.rbenv/versions/2.7.2/lib/ruby/gems/2.7.0/gems/octokit-6.0.1/lib/octokit/middleware/follow_redirects.rb:61:in `call'

4: from /Users/krace/.rbenv/versions/2.7.2/lib/ruby/gems/2.7.0/gems/octokit-6.0.1/lib/octokit/middleware/follow_redirects.rb:73:in `perform_with_redirection'

3: from /Users/krace/.rbenv/versions/2.7.2/lib/ruby/gems/2.7.0/gems/faraday-2.7.1/lib/faraday/middleware.rb:17:in `call'

2: from /Users/krace/.rbenv/versions/2.7.2/lib/ruby/gems/2.7.0/gems/faraday-2.7.1/lib/faraday/response.rb:42:in `on_complete'

1: from /Users/krace/.rbenv/versions/2.7.2/lib/ruby/gems/2.7.0/gems/faraday-2.7.1/lib/faraday/middleware.rb:18:in `block in call'

/Users/krace/.rbenv/versions/2.7.2/lib/ruby/gems/2.7.0/gems/octokit-6.0.1/lib/octokit/response/raise_error.rb:14:in `on_complete': GET https://api.github.com/users/404: 404 - Not Found // See: https://docs.github.com/rest/reference/users#get-a-user (Octokit::NotFound)

```

Ah ha. the `profile` 404 returns `404` and the program fails, now enhance the prompt to modify it.

**Prompt**

```

Can you write a ruby program to read a list of github profile from a text file,

check each profile exists in github and write the result to a JSON file? The code should handle exceptions.

If you use external gem, specify the installation instruction. Also read secrets from environment variable.

```

**Generated code**

```ruby

require 'octokit'

require 'json'

# Read profile names from text file

profiles = File.readlines("profiles.txt").map(&:chomp)

# Read access token from environment variable

access_token = ENV["ACCESS_TOKEN"]

client = Octokit::Client.new(access_token: access_token)

# Create a new hash to store the result

result = {}

profiles.each do |profile|

begin

user = client.user(profile)

if user

result[profile] = true

else

result[profile] = false

end

rescue Octokit::NotFound

result[profile] = false

end

end

# Write the result to a JSON file

File.open("result.json","w") do |f|

f.write(result.to_json)

end

```

- Now the model generates the code with exception handling but only handles 404 without explicitly mentioning the specific exception in the prompt. Neat!

**Output**

```

$ruby case_2.rb

To use retry middleware with Faraday v2.0+, install `faraday-retry` gem

$cat result.json

{"kracekumar":true,"zzzzzz":true,"404":false}

```

# Case 3: Read from dictionary, check the profile in github, handle rate-limit and write the results to file.

**Prompt**

```

Can you write a ruby program to read a list of github profile from a text file in the location /usr/share/dict/words,

check each profile exists in github, check for rate limit and write the result to a JSON file?

Print result of each user check in the terminal. The code should handle exceptions.

If you use external gem, specify the installation instruction. Also read secrets from environment variable.

```

**Generated Code**

```ruby

require 'octokit'

require 'json'

# Read profile names from text file

profiles = File.readlines("/usr/share/dict/words").map(&:chomp)

# Read access token from environment variable

access_token = ENV["ACCESS_TOKEN"]

client = Octokit::Client.new(access_token: access_token)

# Create a new hash to store the result

result = {}

profiles.each do |profile|

# Check for rate limit

rate_limit = client.rate_limit

if rate_limit.remaining == 0

puts "Rate limit exceeded. Waiting for reset at #{rate_limit.reset_at}..."

sleep rate_limit.resets_in

end

begin

user = client.user(profile)

if user

result[profile] = true

puts "#{profile} exists on GitHub"

else

result[profile] = false

puts "#{profile} does not exist on GitHub"

end

rescue Octokit::NotFound

result[profile] = false

puts "#{profile} does not exist on GitHub"

end

end

# Write the result to a JSON file

File.open("result.json","w") do |f|

f.write(result.to_json)

end

```

**Output**

```

$ruby case_3.rb

To use retry middleware with Faraday v2.0+, install `faraday-retry` gem

A exists on GitHub

a exists on GitHub

aa exists on GitHub

aal exists on GitHub

...

abarthrosis does not exist on GitHub

Traceback (most recent call last):

2: from case_3.rb:15:in `<main>'

1: from case_3.rb:15:in `each'

case_3.rb:19:in `block in <main>': undefined method `reset_at' for #<Octokit::RateLimit:0x0000000107ba8760> (NoMethodError)

Did you mean? resets_at

resets_at=

```

Oops, the code breaks at line number 19 - `rate_limit.reset_at`.

Let's give feedback to the chatgpt, saying the code fails at line 19.

**Prompt**

```

the above code failed with the following error, undefined method `reset_at' for #<Octokit::RateLimit:0x000000010498f210> (NoMethodError)

Did you mean? resets_at

resets_at=

Can you fix it?

```

**Generated code**

```ruby

require 'octokit'

require 'json'

# Read profile names from text file

profiles = File.readlines("/usr/share/dict/words").map(&:chomp)

# Read access token from environment variable

access_token = ENV["ACCESS_TOKEN"]

client = Octokit::Client.new(access_token: access_token)

# Create a new hash to store the result

result = {}

profiles.each do |profile|

# Check for rate limit

rate_limit = client.rate_limit

if rate_limit.remaining == 0

puts "Rate limit exceeded. Waiting for reset at #{rate_limit.resets_at}..."

sleep rate_limit.resets_in

end

begin

user = client.user(profile)

if user

result[profile] = true

puts "#{profile} exists on GitHub"

else

result[profile] = false

puts "#{profile} does not exist on GitHub"

end

rescue Octokit::NotFound

result[profile] = false

puts "#{profile} does not exist on GitHub"

end

end

# Write the result to a JSON file

File.open("result.json","w") do |f|

f.write(result.to_json)

end

```

The model generated above code with a message,

`The error occurred because the Octokit::RateLimit object does not have a method named reset_at. The correct method is resets_at.` Great!

**Output**

```

$ruby case_3.rb

To use retry middleware with Faraday v2.0+, install `faraday-retry` gem

Rate limit exceeded. Waiting for reset at 2022-12-04 19:46:29 +0000...

```

Now the bug is fixed and worked as expected! I don't say issues. OF course! there can be all sort of exceptions that need to be handled - socket timeout, slow response time, 500s etc... Saving the results to a file after checking all profiles is problematic.

# Case 4: Let's tell the model to refactor the code into functions.

**Prompt**

```

Thanks, that fixed exception. Can you refactor the code into functions?

```

**Generated Code**

```ruby

require 'octokit'

require 'json'

# Read profile names from text file

def read_profiles

File.readlines("/usr/share/dict/words").map(&:chomp)

end

# Check if user exists on GitHub

def check_user(user)

begin

client.user(user)

true

rescue Octokit::NotFound

false

end

end

# Check for rate limit and wait if necessary

def check_rate_limit

rate_limit = client.rate_limit

if rate_limit.remaining == 0

puts "Rate limit exceeded. Waiting for reset at #{rate_limit.resets_at}..."

sleep rate_limit.resets_in

end

end

# Read access token from environment variable

access_token = ENV["ACCESS_TOKEN"]

client = Octokit::Client.new(access_token: access_token)

# Create a new hash to store the result

result = {}

profiles = read_profiles

profiles.each do |profile|

check_rate_limit

result[profile] = check_user(profile)

puts "#{profile} exists on GitHub" if result[profile]

puts "#{profile} does not exist on GitHub" unless result[profile]

end

# Write the result to a JSON file

File.open("result.json","w") do |f|

f.write(result.to_json)

end

```

The generated code is synatically invalid, since `client` variable is undefined in 21

**Prompt**

```

I get the error when I run the above code, Traceback (most recent call last):

3: from case_3.rb:38:in `<main>'

2: from case_3.rb:38:in `each'

1: from case_3.rb:39:in `block in <main>'

case_3.rb:21:in `check_rate_limit': undefined local variable or method `client' for main:Object (NameError)

```

**Generated Code**

```ruby

require 'octokit'

require 'json'

# Read profile names from text file

def read_profiles

File.readlines("/usr/share/dict/words").map(&:chomp)

end

# Check if user exists on GitHub

def check_user(client, user)

begin

client.user(user)

true

rescue Octokit::NotFound

false

end

end

# Check for rate limit and wait if necessary

def check_rate_limit(client)

rate_limit = client.rate_limit

if rate_limit.remaining == 0

puts "Rate limit exceeded. Waiting for reset at #{rate_limit.resets_at}..."

sleep rate_limit.resets_in

end

end

# Read access token from environment variable

access_token = ENV["ACCESS_TOKEN"]

client = Octokit::Client.new(access_token: access_token)

# Create a new hash to store the result

result = {}

profiles = read_profiles

profiles.each do |profile|

check_rate_limit(client)

result[profile] = check_user(client, profile)

puts "#{profile} exists on GitHub" if result[profile]

puts "#{profile} does not exist on GitHub" unless result[profile]

end

# Write the result to a JSON file

File.open("result.json","w") do |f|

f.write(result.to_json)

end

```

Fix message is better: `The error occurred because the check_rate_limit and check_user functions did not have access to the client object. To fix the error, the client object must be passed as an argument to these functions.`

**Output**

```

$ruby case_3.rb

To use retry middleware with Faraday v2.0+, install `faraday-retry` gem

Rate limit exceeded. Waiting for reset at 2022-12-04 19:46:29 +0000...

```

# Case 5: Write the test cases

**Prompt**

```

Can you write the test cases for the above code?

```

**Generated Code**

```ruby

require 'minitest/autorun'

require 'octokit'

require 'json'

class TestGitHubProfileChecker < Minitest::Test

def setup

@access_token = ENV["ACCESS_TOKEN"]

@client = Octokit::Client.new(access_token: @access_token)

end

def test_read_profiles

expected_profiles = ["test1", "test2"]

File.stub :readlines, expected_profiles do

assert_equal expected_profiles, read_profiles

end

end

def test_check_user_exists

user = "octocat"

assert check_user(@client, user)

end

def test_check_user_does_not_exist

user = "not_a_real_user"

refute check_user(@client, user)

end

def test_check_rate_limit

rate_limit = @client.rate_limit

rate_limit.stub :remaining, 0 do

rate_limit.stub :resets_at, Time.now do

rate_limit.stub :resets_in, 0 do

assert_output "Rate limit exceeded. Waiting for reset at #{rate_limit.resets_at}...\n" do

check_rate_limit(@client)

end

end

end

end

end

end

```

After adding the previously generated code, a test case failed.

```

ruby test_case.rb

To use retry middleware with Faraday v2.0+, install `faraday-retry` gem

Run options: --seed 34383

# Running:

..F.

Finished in 0.782971s, 5.1087 runs/s, 5.1087 assertions/s.

1) Failure:

TestGitHubProfileChecker#test_check_rate_limit [test_case.rb:83]:

In stdout.

--- expected

+++ actual

@@ -1,2 +1 @@

-"Rate limit exceeded. Waiting for reset at 2022-12-04 20:24:05 +0000...

-"

+""

4 runs, 4 assertions, 1 failures, 0 errors, 0 skips

```

Looking at the code, rate limit code was stubbed but doesn't behave as expected. Again updating the prompt, a couple of times,

the model produces same test code and it is unable to fix it. After multiple prompts, the model stop generating complete code.

**Final generated code**

```ruby

require 'minitest/autorun'

require 'octokit'

require 'json'

class TestGitHubProfileChecker < Minitest::Test

def setup

@access_token = ENV["ACCESS_TOKEN"]

@client = Octokit::Client.new(access_token: @access_token)

end

def test_read_profiles

expected_profiles = ["test1", "test2"]

File.stub :readlines, expected_profiles do

assert_equal expected_profiles, read_profiles

end

end

def test_check_user_exists

user = "octocat"

assert check_user(@client, user)

end

def test_check_user_does_not_exist

user = "not_a_real_user"

refute check_user(@client, user)

end

def test_check_rate_limit

now = Time.now

rate_limit = Octokit::RateLimit.new(remaining: 0, resets_at: now.to_i, resets_in: 0)

@client.stub :rate_limit, rate_limit do

assert_output "Rate limit exceeded. Waiting for reset at #{now}...\n" do

check_rate_limit(@client)

```

**Final conversation**

```

Me: How many lines of code are you allowed to generate in a given time?

Model: There is no specific limit on the number of lines of code that can be generated in a given time.

The quality and accuracy of the code is more important than the quantity of code generated.

Me: But your previous code generation was incomplete. can you tell me what happened?

Model: I apologize if my previous code generation was incomplete. There can be a number of reasons why this may have happened.

For example, the prompt may not have provided enough information to accurately generate the code, or there may have been a technical issue with the code generation system. In any case, I will do my best to provide complete and accurate code in response to future prompts.

```

# Takeway

- Given enough context and iteration the model is able to correct the simple errors.

- It take many attempts to get something working, but it's far away from production quality code(could be pure model safe-guard).

- It's able to remember the previous context and improvise based on feedback.

- Once things get slightly complicated the model fails to generate syntactically correct code.

It was definitely fun to play around with the model but making the model to produce fool proof code seems very far away.

- [Python 3.11 micro-benchmark](https://kracekumar.com/post/micro-benchmark-python-311/index.md): Python 3.11 micro-benchmark

---

title: "Python 3.11 micro-benchmark"

date: 2022-10-31T12:14:14Z

draft: false

---

[speed.python.org](https://speed.python.org/comparison/) tracks Python module performance improvement

against several modules across Python versions.

In the real world, the module level speed improvements don't

directly translate to application performance improvements.

The application is composed of several hundreds of dependencies

the performance of one specific module doesn't improve total

application performance. Nonetheless, it can improve performance

parts of the API or certain flows.

When I first heard the [faster CPython initiative](https://github.com/faster-cpython/ideas), I was intrigued to

find out, how does it translate to small application performance across

various versions since a lot of critical components are already in C

like Postgresql driver. The faster CPython presentation clear states,

the performance boost is only guaranteed for pure python code and

not C-extensions.

In this post, I'll share my benchmark results on a couple of hand picked snippets.

There is a PyPI package data, do some transformation or do some network operations

or file operations. How does that perform against different Python versions.

# Setup

- The benchmark was run on `Intel 11th Gen, i7 @ 2.30GHz` with 16 cores.

During entire benchmark no other user initialized programs was run like browser or text editor.

- The benchmark result was measured using `hyperfine` [command line tool](https://github.com/sharkdp/hyperfine) with `--warmup 1` flag and 10 runs for each version.

- No CPU pinning during benchmark.

- Python 3.9.13, Python 3.10.5, Python 3.11.0 versions were used for benchmarking.

- Median of 10 runs is used over mean.

Here is the result of the benchmark.

``` bash

Python performance - 3.9 vs 3.10 vs 3.11

┏━━━━━━━━━━━━━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━━━━┳━━━━━━━━━━━━━┓

┃ Name ┃ Median 3.9 (s) ┃ Median 3.10 (s) ┃ Median 3.11 (s) ┃ 3.11 Change ┃

┡━━━━━━━━━━━━━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━━━━╇━━━━━━━━━━━━━┩

│ pypicache │ 7.4096 │ 7.2654 │ 6.9122 │ 6.71% │

│ pypi_compression │ 57.2634 │ 57.3878 │ 57.3969 │ -0.23% │

│ pypi_postgres │ 11.4657 │ 11.3525 │ 11.1345 │ 2.89% │

│ pypi_sqlite_utils │ 35.6113 │ 34.8789 │ 34.3522 │ 3.54% │

│ pypi_write_file │ 17.7075 │ 17.2318 │ 16.7363 │ 5.48% │

│ pypi_write_file_parallel │ 12.7005 │ 13.0702 │ 12.5040 │ 4.33% │

│ pypi_zstd_compression │ 1.4794 │ 1.4687 │ 1.4643 │ 1.02% │

└──────────────────────────┴────────────────┴─────────────────┴─────────────────┴─────────────┘

```

# Experiments

## PyPI Cache

``` python

import json

from operator import itemgetter

from urllib.parse import urlparse

from rich.console import Console

from rich.table import Table

from collections import defaultdict

def get_domain(addr):

domain = urlparse(addr)

return domain.netloc

def count_domains(data):

result = defaultdict(int)

for package in data: